20. Pretraining#

Up until this point, we have been building deep learning models from scratch and mostly training on labelled data to complete a task. A lot of times, especially in chemistry, labelled data is not readily accessible or abundant. In this scenerio, it is helpful to use a pretrained model and leverage the pretrained weights and architecture to learn a new task. In this chapter, we will look into pretraining, how it works, and some applications.

Audience & Objectives

This chapter builds on Standard Layers and Graph Neural Networks. After completing this chapter, you should be able to

Understand why pretraining is useful, and in which situations it is appropriate

Understand transfer learning and fine-tuning

Be able to use a pretrained model for a simple downstream task

20.1. How does pretraining work?#

Pretraining is a training process in which the weights of a model can be trained on a large dataset, for use as a starting place for training on smaller, similar datasets.

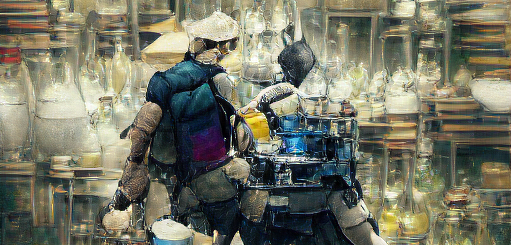

Supervised deep learning models are generally trained on labeled data to achieve a single task. However, for most practical problems, especially in chemistry, labeled examples are limited, imbalanced, or expensive to obtain, whereas unlabeled data is abundant. When labeled data is scarce, supervised learning techniques lead to poor generalization [Mao20]. Instead, in low data regimes, self-supervised learning (SSL) methods (an unsupervised learning approach) are often employed. In SSL, the model is trained on labels that are automatically generated from the data itself. SSL has been largely successful in large language models and computer vision, as well as in chemistry. SSL is the approach used to pre-train models, which can be fine-tuned for downstream tasks, or can be used for transfer learning. The figure below from [ECBV10] shows how pretraining can affect test error.

Fig. 20.1 Test error comparison. Comparing test loss error on MNIST data, with 400 different iterations each. On the left, red and blue correspond to test error for one layer with and without pretraining, respectively. The right image has four layers instead of one.#

20.2. Why does pretraining a model work?#

There are many theoretical reasons for why pretraining works. Pretraining can be seen as a sort of regularization technique, because it initializes parameters and restricts learning to a subset of the parameter space [Mao20, ECBV10]. More specifically, the parameters are initialized so that they are restricted to a better local basin of attraction…a region that captures the structure of the input distribution [YCS+20]. Practically, the parameter space is more constrained as the magnitude of the weights increase during training because the function becomes more nonlinear, and the loss function becomes more topologically complex [YCS+20].

In plainer words, the model collects information about which aspects of the inputs are important, setting the weights accordingly. Then, the model can perform implicit metalearning (helping with hyperparameter choice), and it has been shown that the fine-tuned models’ weights are often not far from the pretrained values [Mao20]. Thus, pretraining can help your model drive the parameters toward the values you actually want for your downstream task.

20.3. Transfer learning vs fine-tuning#

20.3.1. Transfer Learning#

Transfer learning works by taking a pretrained model and freezing the layers and parameters that were already trained. Then you can either add layer(s) on top, or you can modify only the last layer and train it to your new task. In transfer learning, the feature extraction layers from the pretraining process are kept frozen. It is necessary that your data has some connection with the original data.

There are largely two types of transfer learning, and you can find a more formal definition in [Mao20]. The first is transductive transfer learning, where you have the same tasks, but only have labels in the source (pretraining) dataset. For example, imagine training a model to predict the space group of theoretical inorganic crystal structures. Transductive transfer learning could be using this model to predict the space group of self-assembled biochemical structures. You’re using a different dataset, where the only labels are in the inorganic crystal data.

The second type of transfer learning is called inductive transfer learning, where you want to learn a new task, and you have labels for both your source and your target dataset. For example, imagine you train a model to predict solubility of small organic molecules. You could use inductive transfer learning and use this model to predict the pKa of another organic molecule (labeled) dataset. Notice that in both cases, the input type is the same for the source and the target problem. Also, this shouldn’t be too difficult for the model, since you would imagine there would be some relationship between the solubility and the pKa of organic molecules.

20.3.2. Fine-Tuning#

Fine-tuning is a bit different in that instead of freezing the layers and parameters, you retrain either the entire model or parts of the model. So instead of freezing the pre-trained parameters, you use them as a starting point. This can be especially helpful for low-data regimes. However, it is easy to quickly overfit when fine-tuning a pretrained model, especially on a relatively small dataset, so it is important to tune your hyperparameters, such as the learning rate.

For example, SMILES-BERT [WGW+19] is a model pre-trained on SMILES strings via a recovery task. The unlabeled data is SMILES strings, with randomly masked or corrupted tokens. The model is trained to correctly recover the original SMILES string. By learning this task, the model learns to identify important components of the input, which can be applied via fine-tuning to a molecular property prediction downstream task. In this case, the original dataset is unlabeled, and the labels are generated automatically from the data, which is SMILES strings. Then, the target task dataset is SMILES strings with a molecular property label.

For more information on the comparison between transfer learning and fine-tuning, you can check out this youtube video. Also, the figure below gives a layout of fine-tuning and transfer learning. What is important to note is that in transfer learning, we retrain the last layer or add layers on the end, whereas in fine-tuning we can retrain the feature extraction layers also.

Fig. 20.2 Comparison of fine-tuning and transfer learning with a general model architecture. Starting with the top middle block (original model), follow the flow chart for different situations.#

20.4. Pretraining for graph models#

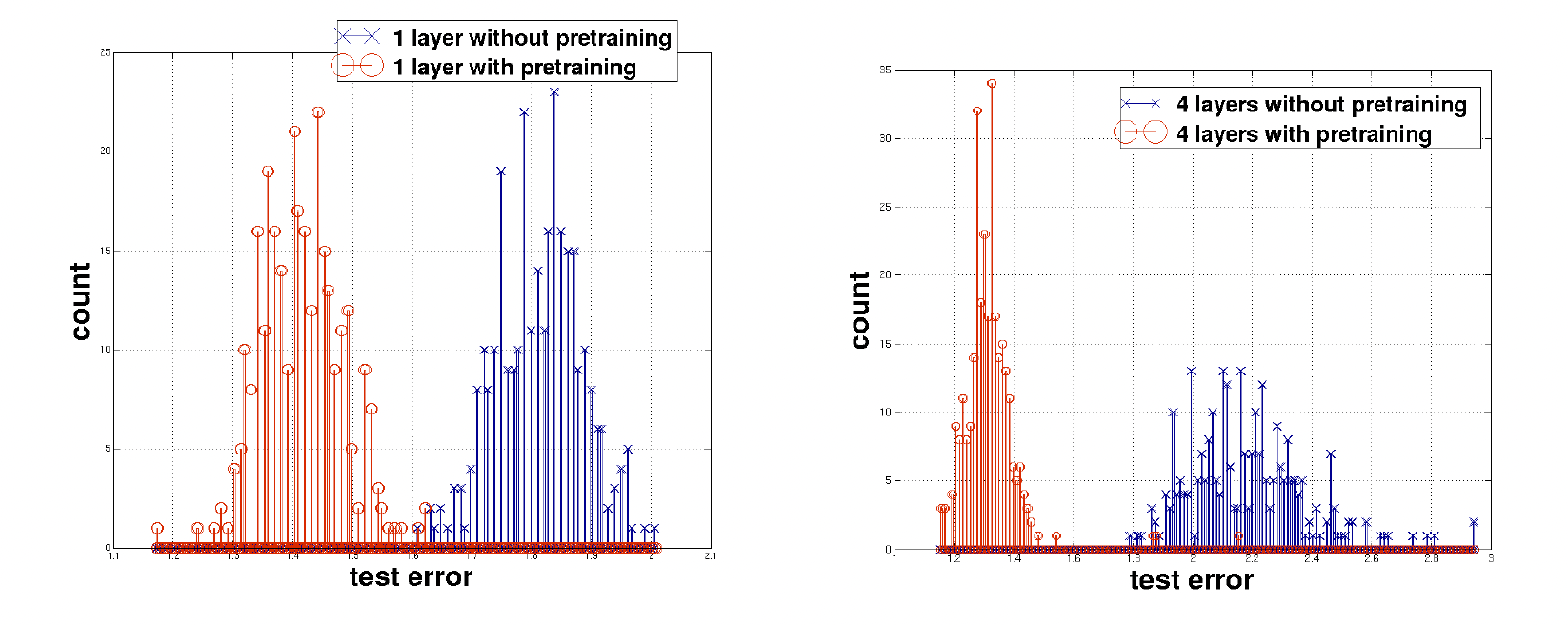

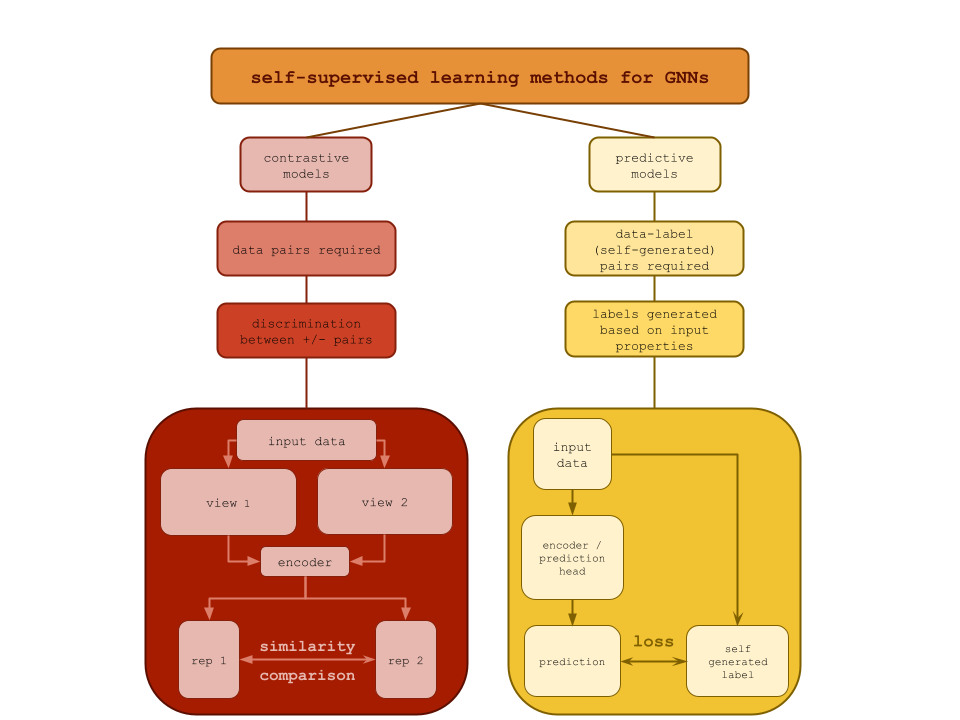

GNNs typically require a large amount of labeled data and are not typically generalizable. Particularly in chemistry, there is a significant amount of unlabeled graph data available. Because of this, SSL has become very popular in GNNs, and it can be broadly split into two categories based on the method: contrastive learning and predictive learning. Predictive models are trained to generate labels based on the input, whereas contrastive models learn to generate diverse and informative information about the input and perform contrastive learning (compare representations) [ZLW+21]. You can see a comparison of the two methods and example architectures in the figure below [XXZ+22].

Contrastive learning is focused on learning to maximize the agreement of features among differently augmented views of the data [YCS+20]. The goal of a contrastive learning approach is for the model to learn representations invariant to the perturbations or augmentations by maximizing the agreement between the base graph and its augmented versions. In other words, if two graphs are similar, the representation should be similar. Likewise, if two graphs are dissimilar, the model learns that the representations should be dissimilar. There have been many approaches to this, including subgraph or motif-based learning, where the model learns to break apart frequent subgraph patterns, such as functional groups [ZLW+21]. Another approach by [YCS+20] combined 4 different data augmentation techniques, similar to how masking is done for large language models, though [SXW+21] found that those random augmentations often changed the global properties of the molecular graph, proposing instead to augment by replacing substructures with bioisosteres.

Another way to think about contrastive learning is that the model looks at one or more encoders and learns that similar graphs should output similar representations, while less similar graphs should have less agreeable representations. Contrastive learning frameworks construct multiple views of each input graph, then an encoder outputs a representation for each view [XXZ+22]. During training, the encoder is trained so that the agreement between representations of the same graph is maximized. In this case, representations from the same instance (same graph) should agree, while representations from separate instances should disagree. The agreement is often measured with Mutual Information, which is a measure of shared information across representations. A thorough discussion of agreement metrics is given in [XXZ+22].

Predictive models, in contrast, train with self-generated labels. This category of model is sometimes called generative models, as graph reconstruction is a popular approach. In graph reconstruction, the graph is distorted in some way (node removed, edge removed, node replaced with another type, etc.), and the model learns to reconstruct the original graph as its output. However, it is not correct to think of predictive models as simply generative models, because graph reconstruction, with an encoder and decoder, is not the only type of predictive model for graphs. Another popular example is property prediction. In property prediction examples, remember that we are still training on unlabeled data, so the property needs to be something implicit in the data, such as the connectivity of two nodes {cite} xie2022self. There won’t be a decoder in this case, because we don’t want a graph as the output.

Fig. 20.3 Comparison of contrastive and predictive models in the context of self-supervised learning for GNNs. On the left, contrastive models require data pairs and discriminate between positive and negative examples, and an example architecture is provided. On the right, predictive models have data(self)-generated labels and predict outputs based on input properties. An example architecture is provided.#

20.5. Running This Notebook#

Click the above to launch this page as an interactive Google Colab. See details below on installing packages.

Tip

To install packages, execute this code in a new cell.

!pip install dmol-book

If you find install problems, you can get the latest working versions of packages used in this book here

Let’s look at a simple example of using a pre-trained model to do transfer learning. We will load a pre-trained model from the huggingface library and use it to predict aqueous solubility of molecules. HuggingFace is an open source platform that enables users to build, train and deploy their deep learning models. We load the ChemBERTa model which was originally trained on SMILES strings from the ZINC-250k dataset. Using the learned representations from ChemBERTa, we can predict aqueous solubility on a smaller dataset.[SKE19]

from transformers import AutoTokenizer, AutoModelForSequenceClassification, Trainer, TrainingArguments

import pandas as pd, sklearn, matplotlib.pyplot as plt, numpy as np, torch

/opt/hostedtoolcache/Python/3.13.11/x64/lib/python3.13/site-packages/tqdm/auto.py:21: TqdmWarning: IProgress not found. Please update jupyter and ipywidgets. See https://ipywidgets.readthedocs.io/en/stable/user_install.html

from .autonotebook import tqdm as notebook_tqdm

We begin by creating our train and test datasets. The BBB dataset that we use is slightly imbalanced, so we use stratification to make sure both classes are present in train and test sets.

soldata = pd.read_csv(

"https://github.com/whitead/dmol-book/raw/main/data/curated-solubility-dataset.csv"

)

N = int(len(soldata) * 0.1)

sample = soldata.sample(N, replace=False)

train = sample[: int(0.8 * N)]

test = sample[int(0.8 * N) :]

train_dataset = train[["SMILES", "Solubility"]]

train_dataset = train_dataset.rename(columns={"Solubility": "labels", "SMILES": "text"})

test_dataset = test[["SMILES", "Solubility"]]

test_dataset = test_dataset.rename(columns={"Solubility": "labels", "SMILES": "text"})

Next, we initialize a regression model from the ChemBERTa_zinc250k_v2_40k pre-trained model using HuggingFace transformers. We load the tokenizer and a sequence classification model with num_labels=1 (which makes it a regression model). We also create a PyTorch Dataset class for our data. Then we train the model using the solubility dataset.

import logging

logging.getLogger("transformers").setLevel(logging.ERROR)

model_name = "seyonec/ChemBERTa_zinc250k_v2_40k"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForSequenceClassification.from_pretrained(model_name, num_labels=1)

class SolubilityDataset(torch.utils.data.Dataset):

def __init__(self, texts, labels, tokenizer, max_length=128):

self.encodings = tokenizer(texts, truncation=True, padding=True, max_length=max_length)

self.labels = labels

def __getitem__(self, idx):

item = {key: torch.tensor(val[idx]) for key, val in self.encodings.items()}

item["labels"] = torch.tensor(self.labels[idx], dtype=torch.float32)

return item

def __len__(self):

return len(self.labels)

train_ds = SolubilityDataset(train_dataset["text"].tolist(), train_dataset["labels"].tolist(), tokenizer)

test_ds = SolubilityDataset(test_dataset["text"].tolist(), test_dataset["labels"].tolist(), tokenizer)

Warning: You are sending unauthenticated requests to the HF Hub. Please set a HF_TOKEN to enable higher rate limits and faster downloads.

Loading weights: 0%| | 0/101 [00:00<?, ?it/s]

Loading weights: 1%| | 1/101 [00:00<00:00, 16008.79it/s, Materializing param=roberta.embeddings.LayerNorm.bias]

Loading weights: 1%| | 1/101 [00:00<00:00, 2150.93it/s, Materializing param=roberta.embeddings.LayerNorm.bias]

Loading weights: 2%|▏ | 2/101 [00:00<00:00, 1898.30it/s, Materializing param=roberta.embeddings.LayerNorm.weight]

Loading weights: 2%|▏ | 2/101 [00:00<00:00, 1400.90it/s, Materializing param=roberta.embeddings.LayerNorm.weight]

Loading weights: 3%|▎ | 3/101 [00:00<00:00, 1528.35it/s, Materializing param=roberta.embeddings.position_embeddings.weight]

Loading weights: 3%|▎ | 3/101 [00:00<00:00, 1276.93it/s, Materializing param=roberta.embeddings.position_embeddings.weight]

Loading weights: 4%|▍ | 4/101 [00:00<00:00, 1407.96it/s, Materializing param=roberta.embeddings.token_type_embeddings.weight]

Loading weights: 4%|▍ | 4/101 [00:00<00:00, 1243.86it/s, Materializing param=roberta.embeddings.token_type_embeddings.weight]

Loading weights: 5%|▍ | 5/101 [00:00<00:00, 1342.35it/s, Materializing param=roberta.embeddings.word_embeddings.weight]

Loading weights: 5%|▍ | 5/101 [00:00<00:00, 1179.04it/s, Materializing param=roberta.embeddings.word_embeddings.weight]

Loading weights: 6%|▌ | 6/101 [00:00<00:00, 1273.70it/s, Materializing param=roberta.encoder.layer.0.attention.output.LayerNorm.bias]

Loading weights: 6%|▌ | 6/101 [00:00<00:00, 1178.06it/s, Materializing param=roberta.encoder.layer.0.attention.output.LayerNorm.bias]

Loading weights: 7%|▋ | 7/101 [00:00<00:00, 1244.55it/s, Materializing param=roberta.encoder.layer.0.attention.output.LayerNorm.weight]

Loading weights: 7%|▋ | 7/101 [00:00<00:00, 1157.69it/s, Materializing param=roberta.encoder.layer.0.attention.output.LayerNorm.weight]

Loading weights: 8%|▊ | 8/101 [00:00<00:00, 1224.21it/s, Materializing param=roberta.encoder.layer.0.attention.output.dense.bias]

Loading weights: 8%|▊ | 8/101 [00:00<00:00, 1120.01it/s, Materializing param=roberta.encoder.layer.0.attention.output.dense.bias]

Loading weights: 9%|▉ | 9/101 [00:00<00:00, 1182.12it/s, Materializing param=roberta.encoder.layer.0.attention.output.dense.weight]

Loading weights: 9%|▉ | 9/101 [00:00<00:00, 1119.87it/s, Materializing param=roberta.encoder.layer.0.attention.output.dense.weight]

Loading weights: 10%|▉ | 10/101 [00:00<00:00, 1169.31it/s, Materializing param=roberta.encoder.layer.0.attention.self.key.bias]

Loading weights: 10%|▉ | 10/101 [00:00<00:00, 1114.32it/s, Materializing param=roberta.encoder.layer.0.attention.self.key.bias]

Loading weights: 11%|█ | 11/101 [00:00<00:00, 1144.22it/s, Materializing param=roberta.encoder.layer.0.attention.self.key.weight]

Loading weights: 11%|█ | 11/101 [00:00<00:00, 1098.46it/s, Materializing param=roberta.encoder.layer.0.attention.self.key.weight]

Loading weights: 12%|█▏ | 12/101 [00:00<00:00, 992.38it/s, Materializing param=roberta.encoder.layer.0.attention.self.query.bias]

Loading weights: 12%|█▏ | 12/101 [00:00<00:00, 961.68it/s, Materializing param=roberta.encoder.layer.0.attention.self.query.bias]

Loading weights: 13%|█▎ | 13/101 [00:00<00:00, 997.09it/s, Materializing param=roberta.encoder.layer.0.attention.self.query.weight]

Loading weights: 13%|█▎ | 13/101 [00:00<00:00, 969.14it/s, Materializing param=roberta.encoder.layer.0.attention.self.query.weight]

Loading weights: 14%|█▍ | 14/101 [00:00<00:00, 1003.13it/s, Materializing param=roberta.encoder.layer.0.attention.self.value.bias]

Loading weights: 14%|█▍ | 14/101 [00:00<00:00, 975.95it/s, Materializing param=roberta.encoder.layer.0.attention.self.value.bias]

Loading weights: 15%|█▍ | 15/101 [00:00<00:00, 1007.28it/s, Materializing param=roberta.encoder.layer.0.attention.self.value.weight]

Loading weights: 15%|█▍ | 15/101 [00:00<00:00, 981.14it/s, Materializing param=roberta.encoder.layer.0.attention.self.value.weight]

Loading weights: 16%|█▌ | 16/101 [00:00<00:00, 1011.96it/s, Materializing param=roberta.encoder.layer.0.intermediate.dense.bias]

Loading weights: 16%|█▌ | 16/101 [00:00<00:00, 987.13it/s, Materializing param=roberta.encoder.layer.0.intermediate.dense.bias]

Loading weights: 17%|█▋ | 17/101 [00:00<00:00, 1015.27it/s, Materializing param=roberta.encoder.layer.0.intermediate.dense.weight]

Loading weights: 17%|█▋ | 17/101 [00:00<00:00, 986.72it/s, Materializing param=roberta.encoder.layer.0.intermediate.dense.weight]

Loading weights: 18%|█▊ | 18/101 [00:00<00:00, 1017.86it/s, Materializing param=roberta.encoder.layer.0.output.LayerNorm.bias]

Loading weights: 18%|█▊ | 18/101 [00:00<00:00, 996.00it/s, Materializing param=roberta.encoder.layer.0.output.LayerNorm.bias]

Loading weights: 19%|█▉ | 19/101 [00:00<00:00, 1022.82it/s, Materializing param=roberta.encoder.layer.0.output.LayerNorm.weight]

Loading weights: 19%|█▉ | 19/101 [00:00<00:00, 1002.66it/s, Materializing param=roberta.encoder.layer.0.output.LayerNorm.weight]

Loading weights: 20%|█▉ | 20/101 [00:00<00:00, 1027.45it/s, Materializing param=roberta.encoder.layer.0.output.dense.bias]

Loading weights: 20%|█▉ | 20/101 [00:00<00:00, 1006.83it/s, Materializing param=roberta.encoder.layer.0.output.dense.bias]

Loading weights: 21%|██ | 21/101 [00:00<00:00, 1027.48it/s, Materializing param=roberta.encoder.layer.0.output.dense.weight]

Loading weights: 21%|██ | 21/101 [00:00<00:00, 1009.84it/s, Materializing param=roberta.encoder.layer.0.output.dense.weight]

Loading weights: 22%|██▏ | 22/101 [00:00<00:00, 1034.24it/s, Materializing param=roberta.encoder.layer.1.attention.output.LayerNorm.bias]

Loading weights: 22%|██▏ | 22/101 [00:00<00:00, 1015.55it/s, Materializing param=roberta.encoder.layer.1.attention.output.LayerNorm.bias]

Loading weights: 23%|██▎ | 23/101 [00:00<00:00, 1037.83it/s, Materializing param=roberta.encoder.layer.1.attention.output.LayerNorm.weight]

Loading weights: 23%|██▎ | 23/101 [00:00<00:00, 1020.93it/s, Materializing param=roberta.encoder.layer.1.attention.output.LayerNorm.weight]

Loading weights: 24%|██▍ | 24/101 [00:00<00:00, 1035.79it/s, Materializing param=roberta.encoder.layer.1.attention.output.dense.bias]

Loading weights: 24%|██▍ | 24/101 [00:00<00:00, 1018.03it/s, Materializing param=roberta.encoder.layer.1.attention.output.dense.bias]

Loading weights: 25%|██▍ | 25/101 [00:00<00:00, 1036.82it/s, Materializing param=roberta.encoder.layer.1.attention.output.dense.weight]

Loading weights: 25%|██▍ | 25/101 [00:00<00:00, 1017.38it/s, Materializing param=roberta.encoder.layer.1.attention.output.dense.weight]

Loading weights: 26%|██▌ | 26/101 [00:00<00:00, 1037.41it/s, Materializing param=roberta.encoder.layer.1.attention.self.key.bias]

Loading weights: 26%|██▌ | 26/101 [00:00<00:00, 1020.53it/s, Materializing param=roberta.encoder.layer.1.attention.self.key.bias]

Loading weights: 27%|██▋ | 27/101 [00:00<00:00, 1038.72it/s, Materializing param=roberta.encoder.layer.1.attention.self.key.weight]

Loading weights: 27%|██▋ | 27/101 [00:00<00:00, 1022.80it/s, Materializing param=roberta.encoder.layer.1.attention.self.key.weight]

Loading weights: 28%|██▊ | 28/101 [00:00<00:00, 1039.51it/s, Materializing param=roberta.encoder.layer.1.attention.self.query.bias]

Loading weights: 28%|██▊ | 28/101 [00:00<00:00, 1025.58it/s, Materializing param=roberta.encoder.layer.1.attention.self.query.bias]

Loading weights: 29%|██▊ | 29/101 [00:00<00:00, 1043.36it/s, Materializing param=roberta.encoder.layer.1.attention.self.query.weight]

Loading weights: 29%|██▊ | 29/101 [00:00<00:00, 1027.34it/s, Materializing param=roberta.encoder.layer.1.attention.self.query.weight]

Loading weights: 30%|██▉ | 30/101 [00:00<00:00, 1041.80it/s, Materializing param=roberta.encoder.layer.1.attention.self.value.bias]

Loading weights: 30%|██▉ | 30/101 [00:00<00:00, 1027.06it/s, Materializing param=roberta.encoder.layer.1.attention.self.value.bias]

Loading weights: 31%|███ | 31/101 [00:00<00:00, 1043.63it/s, Materializing param=roberta.encoder.layer.1.attention.self.value.weight]

Loading weights: 31%|███ | 31/101 [00:00<00:00, 1029.07it/s, Materializing param=roberta.encoder.layer.1.attention.self.value.weight]

Loading weights: 32%|███▏ | 32/101 [00:00<00:00, 1045.47it/s, Materializing param=roberta.encoder.layer.1.intermediate.dense.bias]

Loading weights: 32%|███▏ | 32/101 [00:00<00:00, 1030.55it/s, Materializing param=roberta.encoder.layer.1.intermediate.dense.bias]

Loading weights: 33%|███▎ | 33/101 [00:00<00:00, 1045.79it/s, Materializing param=roberta.encoder.layer.1.intermediate.dense.weight]

Loading weights: 33%|███▎ | 33/101 [00:00<00:00, 1033.18it/s, Materializing param=roberta.encoder.layer.1.intermediate.dense.weight]

Loading weights: 34%|███▎ | 34/101 [00:00<00:00, 1047.96it/s, Materializing param=roberta.encoder.layer.1.output.LayerNorm.bias]

Loading weights: 34%|███▎ | 34/101 [00:00<00:00, 1035.53it/s, Materializing param=roberta.encoder.layer.1.output.LayerNorm.bias]

Loading weights: 35%|███▍ | 35/101 [00:00<00:00, 1050.97it/s, Materializing param=roberta.encoder.layer.1.output.LayerNorm.weight]

Loading weights: 35%|███▍ | 35/101 [00:00<00:00, 1038.88it/s, Materializing param=roberta.encoder.layer.1.output.LayerNorm.weight]

Loading weights: 36%|███▌ | 36/101 [00:00<00:00, 1052.93it/s, Materializing param=roberta.encoder.layer.1.output.dense.bias]

Loading weights: 36%|███▌ | 36/101 [00:00<00:00, 1041.46it/s, Materializing param=roberta.encoder.layer.1.output.dense.bias]

Loading weights: 37%|███▋ | 37/101 [00:00<00:00, 1054.42it/s, Materializing param=roberta.encoder.layer.1.output.dense.weight]

Loading weights: 37%|███▋ | 37/101 [00:00<00:00, 1043.05it/s, Materializing param=roberta.encoder.layer.1.output.dense.weight]

Loading weights: 38%|███▊ | 38/101 [00:00<00:00, 1054.57it/s, Materializing param=roberta.encoder.layer.2.attention.output.LayerNorm.bias]

Loading weights: 38%|███▊ | 38/101 [00:00<00:00, 1041.95it/s, Materializing param=roberta.encoder.layer.2.attention.output.LayerNorm.bias]

Loading weights: 39%|███▊ | 39/101 [00:00<00:00, 1053.81it/s, Materializing param=roberta.encoder.layer.2.attention.output.LayerNorm.weight]

Loading weights: 39%|███▊ | 39/101 [00:00<00:00, 1042.29it/s, Materializing param=roberta.encoder.layer.2.attention.output.LayerNorm.weight]

Loading weights: 40%|███▉ | 40/101 [00:00<00:00, 1053.98it/s, Materializing param=roberta.encoder.layer.2.attention.output.dense.bias]

Loading weights: 40%|███▉ | 40/101 [00:00<00:00, 1043.43it/s, Materializing param=roberta.encoder.layer.2.attention.output.dense.bias]

Loading weights: 41%|████ | 41/101 [00:00<00:00, 1055.06it/s, Materializing param=roberta.encoder.layer.2.attention.output.dense.weight]

Loading weights: 41%|████ | 41/101 [00:00<00:00, 1043.26it/s, Materializing param=roberta.encoder.layer.2.attention.output.dense.weight]

Loading weights: 42%|████▏ | 42/101 [00:00<00:00, 1054.74it/s, Materializing param=roberta.encoder.layer.2.attention.self.key.bias]

Loading weights: 42%|████▏ | 42/101 [00:00<00:00, 1044.36it/s, Materializing param=roberta.encoder.layer.2.attention.self.key.bias]

Loading weights: 43%|████▎ | 43/101 [00:00<00:00, 1054.66it/s, Materializing param=roberta.encoder.layer.2.attention.self.key.weight]

Loading weights: 43%|████▎ | 43/101 [00:00<00:00, 1043.89it/s, Materializing param=roberta.encoder.layer.2.attention.self.key.weight]

Loading weights: 44%|████▎ | 44/101 [00:00<00:00, 1053.92it/s, Materializing param=roberta.encoder.layer.2.attention.self.query.bias]

Loading weights: 44%|████▎ | 44/101 [00:00<00:00, 1044.20it/s, Materializing param=roberta.encoder.layer.2.attention.self.query.bias]

Loading weights: 45%|████▍ | 45/101 [00:00<00:00, 1054.28it/s, Materializing param=roberta.encoder.layer.2.attention.self.query.weight]

Loading weights: 45%|████▍ | 45/101 [00:00<00:00, 1044.45it/s, Materializing param=roberta.encoder.layer.2.attention.self.query.weight]

Loading weights: 46%|████▌ | 46/101 [00:00<00:00, 1055.86it/s, Materializing param=roberta.encoder.layer.2.attention.self.value.bias]

Loading weights: 46%|████▌ | 46/101 [00:00<00:00, 1045.88it/s, Materializing param=roberta.encoder.layer.2.attention.self.value.bias]

Loading weights: 47%|████▋ | 47/101 [00:00<00:00, 1056.15it/s, Materializing param=roberta.encoder.layer.2.attention.self.value.weight]

Loading weights: 47%|████▋ | 47/101 [00:00<00:00, 1047.03it/s, Materializing param=roberta.encoder.layer.2.attention.self.value.weight]

Loading weights: 48%|████▊ | 48/101 [00:00<00:00, 1057.84it/s, Materializing param=roberta.encoder.layer.2.intermediate.dense.bias]

Loading weights: 48%|████▊ | 48/101 [00:00<00:00, 1048.05it/s, Materializing param=roberta.encoder.layer.2.intermediate.dense.bias]

Loading weights: 49%|████▊ | 49/101 [00:00<00:00, 1058.56it/s, Materializing param=roberta.encoder.layer.2.intermediate.dense.weight]

Loading weights: 49%|████▊ | 49/101 [00:00<00:00, 1048.72it/s, Materializing param=roberta.encoder.layer.2.intermediate.dense.weight]

Loading weights: 50%|████▉ | 50/101 [00:00<00:00, 1058.69it/s, Materializing param=roberta.encoder.layer.2.output.LayerNorm.bias]

Loading weights: 50%|████▉ | 50/101 [00:00<00:00, 1049.08it/s, Materializing param=roberta.encoder.layer.2.output.LayerNorm.bias]

Loading weights: 50%|█████ | 51/101 [00:00<00:00, 1058.44it/s, Materializing param=roberta.encoder.layer.2.output.LayerNorm.weight]

Loading weights: 50%|█████ | 51/101 [00:00<00:00, 1050.27it/s, Materializing param=roberta.encoder.layer.2.output.LayerNorm.weight]

Loading weights: 51%|█████▏ | 52/101 [00:00<00:00, 1058.40it/s, Materializing param=roberta.encoder.layer.2.output.dense.bias]

Loading weights: 51%|█████▏ | 52/101 [00:00<00:00, 1049.59it/s, Materializing param=roberta.encoder.layer.2.output.dense.bias]

Loading weights: 52%|█████▏ | 53/101 [00:00<00:00, 882.07it/s, Materializing param=roberta.encoder.layer.2.output.dense.weight]

Loading weights: 52%|█████▏ | 53/101 [00:00<00:00, 877.46it/s, Materializing param=roberta.encoder.layer.2.output.dense.weight]

Loading weights: 53%|█████▎ | 54/101 [00:00<00:00, 886.11it/s, Materializing param=roberta.encoder.layer.3.attention.output.LayerNorm.bias]

Loading weights: 53%|█████▎ | 54/101 [00:00<00:00, 880.18it/s, Materializing param=roberta.encoder.layer.3.attention.output.LayerNorm.bias]

Loading weights: 54%|█████▍ | 55/101 [00:00<00:00, 888.55it/s, Materializing param=roberta.encoder.layer.3.attention.output.LayerNorm.weight]

Loading weights: 54%|█████▍ | 55/101 [00:00<00:00, 883.18it/s, Materializing param=roberta.encoder.layer.3.attention.output.LayerNorm.weight]

Loading weights: 55%|█████▌ | 56/101 [00:00<00:00, 891.57it/s, Materializing param=roberta.encoder.layer.3.attention.output.dense.bias]

Loading weights: 55%|█████▌ | 56/101 [00:00<00:00, 886.27it/s, Materializing param=roberta.encoder.layer.3.attention.output.dense.bias]

Loading weights: 56%|█████▋ | 57/101 [00:00<00:00, 894.38it/s, Materializing param=roberta.encoder.layer.3.attention.output.dense.weight]

Loading weights: 56%|█████▋ | 57/101 [00:00<00:00, 888.95it/s, Materializing param=roberta.encoder.layer.3.attention.output.dense.weight]

Loading weights: 57%|█████▋ | 58/101 [00:00<00:00, 896.16it/s, Materializing param=roberta.encoder.layer.3.attention.self.key.bias]

Loading weights: 57%|█████▋ | 58/101 [00:00<00:00, 890.47it/s, Materializing param=roberta.encoder.layer.3.attention.self.key.bias]

Loading weights: 58%|█████▊ | 59/101 [00:00<00:00, 899.73it/s, Materializing param=roberta.encoder.layer.3.attention.self.key.weight]

Loading weights: 58%|█████▊ | 59/101 [00:00<00:00, 892.78it/s, Materializing param=roberta.encoder.layer.3.attention.self.key.weight]

Loading weights: 59%|█████▉ | 60/101 [00:00<00:00, 901.43it/s, Materializing param=roberta.encoder.layer.3.attention.self.query.bias]

Loading weights: 59%|█████▉ | 60/101 [00:00<00:00, 896.17it/s, Materializing param=roberta.encoder.layer.3.attention.self.query.bias]

Loading weights: 60%|██████ | 61/101 [00:00<00:00, 902.88it/s, Materializing param=roberta.encoder.layer.3.attention.self.query.weight]

Loading weights: 60%|██████ | 61/101 [00:00<00:00, 898.55it/s, Materializing param=roberta.encoder.layer.3.attention.self.query.weight]

Loading weights: 61%|██████▏ | 62/101 [00:00<00:00, 906.52it/s, Materializing param=roberta.encoder.layer.3.attention.self.value.bias]

Loading weights: 61%|██████▏ | 62/101 [00:00<00:00, 901.03it/s, Materializing param=roberta.encoder.layer.3.attention.self.value.bias]

Loading weights: 62%|██████▏ | 63/101 [00:00<00:00, 908.71it/s, Materializing param=roberta.encoder.layer.3.attention.self.value.weight]

Loading weights: 62%|██████▏ | 63/101 [00:00<00:00, 903.74it/s, Materializing param=roberta.encoder.layer.3.attention.self.value.weight]

Loading weights: 63%|██████▎ | 64/101 [00:00<00:00, 911.24it/s, Materializing param=roberta.encoder.layer.3.intermediate.dense.bias]

Loading weights: 63%|██████▎ | 64/101 [00:00<00:00, 906.03it/s, Materializing param=roberta.encoder.layer.3.intermediate.dense.bias]

Loading weights: 64%|██████▍ | 65/101 [00:00<00:00, 913.44it/s, Materializing param=roberta.encoder.layer.3.intermediate.dense.weight]

Loading weights: 64%|██████▍ | 65/101 [00:00<00:00, 902.25it/s, Materializing param=roberta.encoder.layer.3.intermediate.dense.weight]

Loading weights: 65%|██████▌ | 66/101 [00:00<00:00, 910.89it/s, Materializing param=roberta.encoder.layer.3.output.LayerNorm.bias]

Loading weights: 65%|██████▌ | 66/101 [00:00<00:00, 905.81it/s, Materializing param=roberta.encoder.layer.3.output.LayerNorm.bias]

Loading weights: 66%|██████▋ | 67/101 [00:00<00:00, 912.46it/s, Materializing param=roberta.encoder.layer.3.output.LayerNorm.weight]

Loading weights: 66%|██████▋ | 67/101 [00:00<00:00, 906.62it/s, Materializing param=roberta.encoder.layer.3.output.LayerNorm.weight]

Loading weights: 67%|██████▋ | 68/101 [00:00<00:00, 913.52it/s, Materializing param=roberta.encoder.layer.3.output.dense.bias]

Loading weights: 67%|██████▋ | 68/101 [00:00<00:00, 908.55it/s, Materializing param=roberta.encoder.layer.3.output.dense.bias]

Loading weights: 68%|██████▊ | 69/101 [00:00<00:00, 915.22it/s, Materializing param=roberta.encoder.layer.3.output.dense.weight]

Loading weights: 68%|██████▊ | 69/101 [00:00<00:00, 910.22it/s, Materializing param=roberta.encoder.layer.3.output.dense.weight]

Loading weights: 69%|██████▉ | 70/101 [00:00<00:00, 917.44it/s, Materializing param=roberta.encoder.layer.4.attention.output.LayerNorm.bias]

Loading weights: 69%|██████▉ | 70/101 [00:00<00:00, 912.09it/s, Materializing param=roberta.encoder.layer.4.attention.output.LayerNorm.bias]

Loading weights: 70%|███████ | 71/101 [00:00<00:00, 918.71it/s, Materializing param=roberta.encoder.layer.4.attention.output.LayerNorm.weight]

Loading weights: 70%|███████ | 71/101 [00:00<00:00, 914.11it/s, Materializing param=roberta.encoder.layer.4.attention.output.LayerNorm.weight]

Loading weights: 71%|███████▏ | 72/101 [00:00<00:00, 889.20it/s, Materializing param=roberta.encoder.layer.4.attention.output.dense.bias]

Loading weights: 71%|███████▏ | 72/101 [00:00<00:00, 885.05it/s, Materializing param=roberta.encoder.layer.4.attention.output.dense.bias]

Loading weights: 72%|███████▏ | 73/101 [00:00<00:00, 891.49it/s, Materializing param=roberta.encoder.layer.4.attention.output.dense.weight]

Loading weights: 72%|███████▏ | 73/101 [00:00<00:00, 886.98it/s, Materializing param=roberta.encoder.layer.4.attention.output.dense.weight]

Loading weights: 73%|███████▎ | 74/101 [00:00<00:00, 892.97it/s, Materializing param=roberta.encoder.layer.4.attention.self.key.bias]

Loading weights: 73%|███████▎ | 74/101 [00:00<00:00, 888.43it/s, Materializing param=roberta.encoder.layer.4.attention.self.key.bias]

Loading weights: 74%|███████▍ | 75/101 [00:00<00:00, 895.33it/s, Materializing param=roberta.encoder.layer.4.attention.self.key.weight]

Loading weights: 74%|███████▍ | 75/101 [00:00<00:00, 890.54it/s, Materializing param=roberta.encoder.layer.4.attention.self.key.weight]

Loading weights: 75%|███████▌ | 76/101 [00:00<00:00, 897.21it/s, Materializing param=roberta.encoder.layer.4.attention.self.query.bias]

Loading weights: 75%|███████▌ | 76/101 [00:00<00:00, 892.78it/s, Materializing param=roberta.encoder.layer.4.attention.self.query.bias]

Loading weights: 76%|███████▌ | 77/101 [00:00<00:00, 899.26it/s, Materializing param=roberta.encoder.layer.4.attention.self.query.weight]

Loading weights: 76%|███████▌ | 77/101 [00:00<00:00, 894.88it/s, Materializing param=roberta.encoder.layer.4.attention.self.query.weight]

Loading weights: 77%|███████▋ | 78/101 [00:00<00:00, 901.38it/s, Materializing param=roberta.encoder.layer.4.attention.self.value.bias]

Loading weights: 77%|███████▋ | 78/101 [00:00<00:00, 897.30it/s, Materializing param=roberta.encoder.layer.4.attention.self.value.bias]

Loading weights: 78%|███████▊ | 79/101 [00:00<00:00, 903.43it/s, Materializing param=roberta.encoder.layer.4.attention.self.value.weight]

Loading weights: 78%|███████▊ | 79/101 [00:00<00:00, 898.86it/s, Materializing param=roberta.encoder.layer.4.attention.self.value.weight]

Loading weights: 79%|███████▉ | 80/101 [00:00<00:00, 904.88it/s, Materializing param=roberta.encoder.layer.4.intermediate.dense.bias]

Loading weights: 79%|███████▉ | 80/101 [00:00<00:00, 900.94it/s, Materializing param=roberta.encoder.layer.4.intermediate.dense.bias]

Loading weights: 80%|████████ | 81/101 [00:00<00:00, 906.96it/s, Materializing param=roberta.encoder.layer.4.intermediate.dense.weight]

Loading weights: 80%|████████ | 81/101 [00:00<00:00, 902.45it/s, Materializing param=roberta.encoder.layer.4.intermediate.dense.weight]

Loading weights: 81%|████████ | 82/101 [00:00<00:00, 908.79it/s, Materializing param=roberta.encoder.layer.4.output.LayerNorm.bias]

Loading weights: 81%|████████ | 82/101 [00:00<00:00, 904.46it/s, Materializing param=roberta.encoder.layer.4.output.LayerNorm.bias]

Loading weights: 82%|████████▏ | 83/101 [00:00<00:00, 910.33it/s, Materializing param=roberta.encoder.layer.4.output.LayerNorm.weight]

Loading weights: 82%|████████▏ | 83/101 [00:00<00:00, 905.22it/s, Materializing param=roberta.encoder.layer.4.output.LayerNorm.weight]

Loading weights: 83%|████████▎ | 84/101 [00:00<00:00, 910.90it/s, Materializing param=roberta.encoder.layer.4.output.dense.bias]

Loading weights: 83%|████████▎ | 84/101 [00:00<00:00, 905.81it/s, Materializing param=roberta.encoder.layer.4.output.dense.bias]

Loading weights: 84%|████████▍ | 85/101 [00:00<00:00, 899.22it/s, Materializing param=roberta.encoder.layer.4.output.dense.weight]

Loading weights: 84%|████████▍ | 85/101 [00:00<00:00, 896.02it/s, Materializing param=roberta.encoder.layer.4.output.dense.weight]

Loading weights: 85%|████████▌ | 86/101 [00:00<00:00, 902.27it/s, Materializing param=roberta.encoder.layer.5.attention.output.LayerNorm.bias]

Loading weights: 85%|████████▌ | 86/101 [00:00<00:00, 897.85it/s, Materializing param=roberta.encoder.layer.5.attention.output.LayerNorm.bias]

Loading weights: 86%|████████▌ | 87/101 [00:00<00:00, 902.81it/s, Materializing param=roberta.encoder.layer.5.attention.output.LayerNorm.weight]

Loading weights: 86%|████████▌ | 87/101 [00:00<00:00, 898.82it/s, Materializing param=roberta.encoder.layer.5.attention.output.LayerNorm.weight]

Loading weights: 87%|████████▋ | 88/101 [00:00<00:00, 904.32it/s, Materializing param=roberta.encoder.layer.5.attention.output.dense.bias]

Loading weights: 87%|████████▋ | 88/101 [00:00<00:00, 899.61it/s, Materializing param=roberta.encoder.layer.5.attention.output.dense.bias]

Loading weights: 88%|████████▊ | 89/101 [00:00<00:00, 893.71it/s, Materializing param=roberta.encoder.layer.5.attention.output.dense.weight]

Loading weights: 88%|████████▊ | 89/101 [00:00<00:00, 890.22it/s, Materializing param=roberta.encoder.layer.5.attention.output.dense.weight]

Loading weights: 89%|████████▉ | 90/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.output.dense.weight]

Loading weights: 89%|████████▉ | 90/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.key.bias]

Loading weights: 89%|████████▉ | 90/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.key.bias]

Loading weights: 90%|█████████ | 91/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.key.weight]

Loading weights: 90%|█████████ | 91/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.key.weight]

Loading weights: 91%|█████████ | 92/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.query.bias]

Loading weights: 91%|█████████ | 92/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.query.bias]

Loading weights: 92%|█████████▏| 93/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.query.weight]

Loading weights: 92%|█████████▏| 93/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.query.weight]

Loading weights: 93%|█████████▎| 94/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.value.bias]

Loading weights: 93%|█████████▎| 94/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.value.bias]

Loading weights: 94%|█████████▍| 95/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.value.weight]

Loading weights: 94%|█████████▍| 95/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.attention.self.value.weight]

Loading weights: 95%|█████████▌| 96/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.intermediate.dense.bias]

Loading weights: 95%|█████████▌| 96/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.intermediate.dense.bias]

Loading weights: 96%|█████████▌| 97/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.intermediate.dense.weight]

Loading weights: 96%|█████████▌| 97/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.intermediate.dense.weight]

Loading weights: 97%|█████████▋| 98/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.output.LayerNorm.bias]

Loading weights: 97%|█████████▋| 98/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.output.LayerNorm.bias]

Loading weights: 98%|█████████▊| 99/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.output.LayerNorm.weight]

Loading weights: 98%|█████████▊| 99/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.output.LayerNorm.weight]

Loading weights: 99%|█████████▉| 100/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.output.dense.bias]

Loading weights: 99%|█████████▉| 100/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.output.dense.bias]

Loading weights: 100%|██████████| 101/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.output.dense.weight]

Loading weights: 100%|██████████| 101/101 [00:00<00:00, 895.26it/s, Materializing param=roberta.encoder.layer.5.output.dense.weight]

Loading weights: 100%|██████████| 101/101 [00:00<00:00, 873.43it/s, Materializing param=roberta.encoder.layer.5.output.dense.weight]

training_args = TrainingArguments(

output_dir="./chemberta_solubility",

num_train_epochs=5,

per_device_train_batch_size=16,

per_device_eval_batch_size=16,

logging_steps=50,

use_cpu=True,

)

trainer = Trainer(

model=model,

args=training_args,

train_dataset=train_ds,

eval_dataset=test_ds,

)

trainer.train()

{'loss': '3.632', 'grad_norm': '28.32', 'learning_rate': '4.02e-05', 'epoch': '1'}

{'loss': '2.122', 'grad_norm': '25.37', 'learning_rate': '3.02e-05', 'epoch': '2'}

{'loss': '1.299', 'grad_norm': '57.46', 'learning_rate': '2.02e-05', 'epoch': '3'}

{'loss': '0.9429', 'grad_norm': '13.79', 'learning_rate': '1.02e-05', 'epoch': '4'}

{'loss': '0.6868', 'grad_norm': '27.63', 'learning_rate': '2e-07', 'epoch': '5'}

Writing model shards: 0%| | 0/1 [00:00<?, ?it/s]

Writing model shards: 100%|██████████| 1/1 [00:00<00:00, 5.53it/s]

Writing model shards: 100%|██████████| 1/1 [00:00<00:00, 5.51it/s]

{'train_runtime': '603.8', 'train_samples_per_second': '6.608', 'train_steps_per_second': '0.414', 'train_loss': '1.736', 'epoch': '5'}

TrainOutput(global_step=250, training_loss=1.7364631805419921, metrics={'train_runtime': 603.8067, 'train_samples_per_second': 6.608, 'train_steps_per_second': 0.414, 'train_loss': 1.7364631805419921, 'epoch': 5.0})

Now we evaluate the trained model on our test set.

eval_result = trainer.evaluate()

print(eval_result)

{'eval_loss': '1.722', 'eval_runtime': '7.985', 'eval_samples_per_second': '25.05', 'eval_steps_per_second': '1.628', 'epoch': '5'}

{'eval_loss': 1.7221847772598267, 'eval_runtime': 7.9853, 'eval_samples_per_second': 25.046, 'eval_steps_per_second': 1.628, 'epoch': 5.0}

output = trainer.predict(test_ds)

predictions = output.predictions.squeeze()

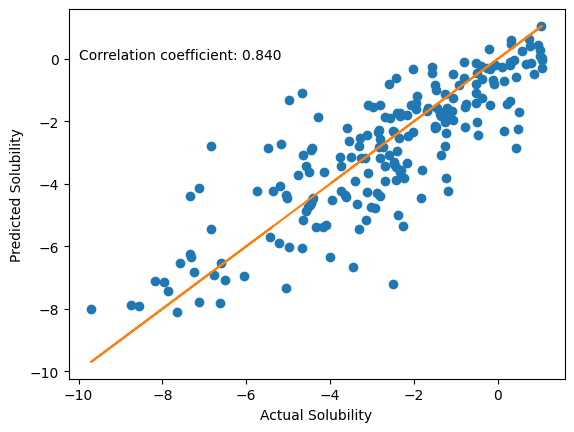

plt.scatter(test_dataset["labels"].tolist(), predictions, color="C0")

plt.plot(test_dataset["labels"], test_dataset["labels"], color="C1")

plt.text(

-10,

0.0,

f"Correlation coefficient: {np.corrcoef(test_dataset['labels'], predictions)[0,1]:.3f}",

)

plt.xlabel("Actual Solubility")

plt.ylabel("Predicted Solubility")

plt.show()

The model performs quite well on our test set. We have fine-tuned the pretrained model for a task that it was not trained for. This shows that even though the original model was trained on the ZINC dataset, the input representations can be used to make predictions on another dataset, with a different task. Using pre-trained models saves time and effort spent in training the model. To further improve performance on this silubility prediction task, you can change some other parameters like the learning rate or add additional layers before the output layer.

20.6. Cited References#

Murat Cihan Sorkun, Abhishek Khetan, and Süleyman Er. AqSolDB, a curated reference set of aqueous solubility and 2D descriptors for a diverse set of compounds. Sci. Data, 6(1):143, 2019. doi:10.1038/s41597-019-0151-1.

Huanru Henry Mao. A survey on self-supervised pre-training for sequential transfer learning in neural networks. arXiv preprint arXiv:2007.00800, 2020.

Dumitru Erhan, Aaron Courville, Yoshua Bengio, and Pascal Vincent. Why does unsupervised pre-training help deep learning? In Proceedings of the thirteenth international conference on artificial intelligence and statistics, 201–208. JMLR Workshop and Conference Proceedings, 2010.

Yuning You, Tianlong Chen, Yongduo Sui, Ting Chen, Zhangyang Wang, and Yang Shen. Graph contrastive learning with augmentations. Advances in Neural Information Processing Systems, 33:5812–5823, 2020.

Sheng Wang, Yuzhi Guo, Yuhong Wang, Hongmao Sun, and Junzhou Huang. Smiles-bert: large scale unsupervised pre-training for molecular property prediction. In Proceedings of the 10th ACM international conference on bioinformatics, computational biology and health informatics, 429–436. 2019.

Zaixi Zhang, Qi Liu, Hao Wang, Chengqiang Lu, and Chee-Kong Lee. Motif-based graph self-supervised learning for molecular property prediction. Advances in Neural Information Processing Systems, 34:15870–15882, 2021.

Yaochen Xie, Zhao Xu, Jingtun Zhang, Zhengyang Wang, and Shuiwang Ji. Self-supervised learning of graph neural networks: a unified review. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

Mengying Sun, Jing Xing, Huijun Wang, Bin Chen, and Jiayu Zhou. Mocl: data-driven molecular fingerprint via knowledge-aware contrastive learning from molecular graph. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, 3585–3594. 2021.