Deep Learning on Sequences

13. Deep Learning on Sequences

Deep learning on sequences is part of a broader long-term effort in machine learning on sequences. Sequences is a broad term that includes text, integer sequences, DNA, and other ordered data. Deep learning on sequences often intersects with another field called natural language processing (NLP). NLP is a much broader field than deep learning, but there is quite a bit of overlap with sequence modeling.

We’ll focus on the application of deep learning on sequences and NLP to molecules and materials. NLP in chemistry would at first appear to be a rich area, especially with the large amount of historic chemistry data existing only in plain text. However, most work in this area has been on representations of molecules as text via the SMILES [Wei88] (and recently SELFIES [KHN+20]) encoding. There is nothing natural about SMILES and SELFIES though, so we should be careful to distinguish work on natural language (like identifying names of compounds in a research article) from tasks like predicting the solubility of a compound from its SMILES. I hope there will be more NLP in the area, but publishers prevent bulk access/ML on publications. Few corpora (collections of natural language documents) exist for NLP on chemistry articles.

Audience & Objectives

This chapter builds on Standard Layers and Attention Layers. After completing this chapter, you should be able to

- Define natural language processing and sequence modeling
- Recognize and be able to encode molecules into SMILES or other string encodings
- Understand RNNs and know some layer types
- Construct RNNs in seq2seq or seq2vec configurations
- Know the transformer architecture
- Understand how design can be made easier in a latent space

One advantage of working with molecules as text relative to graph neural networks (GNNs) is that existing ML frameworks have many more features for working with text, due to the strong connection between NLP and sequence modeling. Another reason is that it is easier to train generative models, because generating valid text is easier than generating valid graphs. You’ll thus see generative/unsupervised learning of chemical space more often done with sequence models, whereas GNNs are typically better for supervised learning tasks and can incorporate spatial features (e.g., [YCW20, KGrossGunnemann20]). Outside of deep learning, graphical representations are viewed as more robust than text encodings when used in methods like genetic algorithms and chemical space exploration [BFSV19]. NLP with sequence models can also be used to understand natural language descriptions of materials and molecules, which is essential for materials that are defined with more than just the molecular structure.

In sequence modeling, unsupervised learning is very common. We can predict the probability of the next token (word or character) in a sequence, like guessing the next word in a sentence. This does not require labels, because we only need examples of the sequences. It is also called pre-training, because it precedes training on sequences with labels. For chemistry this could be predicting the next atom in a SMILES string. For materials, this might be predicting the next word in a synthesis procedure. These pre-trained models have a statistical model of a language and can be fine-tuned (trained a second time) for a more specific task, like predicting if a molecule will bind to a protein.
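As a rough illustration of next-token prediction without labels, the sketch below estimates the probability of the next character given only the current character by counting character pairs. The three SMILES strings and the `^`/`$` start and end markers are assumptions made for this example. A neural sequence model conditions on the whole preceding sequence rather than a single character, so treat this as the simplest possible stand-in.

```python
from collections import Counter, defaultdict

# Tiny unlabeled "corpus" of SMILES strings (chosen only for illustration)
corpus = ["CCO", "CCN", "CCC"]

# Count how often each character follows another; ^ and $ mark start and end
counts = defaultdict(Counter)
for smiles in corpus:
    tokens = ["^"] + list(smiles) + ["$"]
    for prev, nxt in zip(tokens[:-1], tokens[1:]):
        counts[prev][nxt] += 1


def next_token_probs(prev):
    """Estimated P(next | prev) from the pair counts."""
    total = sum(counts[prev].values())
    return {tok: n / total for tok, n in counts[prev].items()}


probs_after_C = next_token_probs("C")
print(probs_after_C)
```

No labels were needed here: the strings themselves supply both the inputs and the targets.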

Another common task in sequence modeling (including NLP) is to convert sequences into continuous vectors. This doesn’t always involve deep learning. Models that can embed sequences into a vector space are often called seq2vec or x2vec, where x might be molecule or synthesis procedure.
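To make the point that a sequence-to-vector mapping does not need deep learning, here is a minimal sketch that embeds a string as character counts. The function name `char_count_vector` and the six-character alphabet are assumptions for this example. It ignores character order and would miscount two-letter atoms like `Cl`, so it is only meant to show the input and output types.

```python
def char_count_vector(smiles, alphabet):
    """Embed a string as counts of each alphabet character (order is ignored)."""
    return [smiles.count(ch) for ch in alphabet]


alphabet = ["C", "N", "O", "=", "(", ")"]
vec = char_count_vector("CC(=O)N", alphabet)
print(vec)  # [2, 1, 1, 1, 1, 1]
```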

Finally, we often see translation tasks where we go from one sequence language to another. A sequence to sequence model (seq2seq) is similar to pre-training because it actually predicts probabilities for the output sequence.

13.1. Converting Molecules into Text

Before we can begin to use neural networks, we need to convert molecules into text. Simplified molecular-input line-entry system (SMILES) is a de facto standard for converting molecules into a string. SMILES enables molecular structures to be correctly saved in spreadsheets, databases, and input to models that work on sequences like text. Here’s an example SMILES string: CC(NC)CC1=CC=C(OCO2)C2=C1. SMILES was crucial to the field of cheminformatics and is widely used today beyond deep learning. Some of the first deep learning work was with SMILES strings because of the ability to apply NLP models to SMILES strings.

Let us imagine SMILES as a function whose domain is molecular graphs (or some equivalent complete description of a molecule) and whose image is a set of strings. This can be thought of as an encoder that converts a molecular graph into a string. The SMILES encoder function is not surjective – there are many strings that cannot be reached by encoding graphs. The SMILES encoder function is injective – each graph has a different SMILES string. The inverse of this function, the SMILES decoder, cannot have the domain of all strings because some strings do not decode to valid molecular graphs. This is because of the syntax rules of SMILES. Thus, we can regard the domain to be restricted to valid SMILES strings. In that case, the decoder is surjective – all graphs are reachable via a SMILES string. The decoder is not injective – the same graph can be reached from multiple SMILES strings.

This last point, the non-injectivity of a SMILES decoder, is a problem identified in database storage and retrieval of compounds. Since multiple SMILES strings map to the same molecular graph, it can happen that multiple entries in a database are actually the same molecule. One way around this is canonicalization which is a modification to the encoder to make a unique SMILES string. It can fail though [OBoyle12]. If we restrict ourselves to valid, canonical SMILES, then the SMILES decoder function is injective and surjective – bijective.
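The database problem can be sketched with a toy example. The lookup table `TOY_CANONICAL` below is hand-written and stands in for a real canonicalization algorithm, and choosing `CCO` and `CCN` as the canonical forms is a convention assumed only for this example. The strings `CCO`, `OCC`, and `C(O)C` all describe ethanol.

```python
# Hand-written table standing in for a real SMILES canonicalizer.
# The canonical forms on the right are a choice made for this example only.
TOY_CANONICAL = {
    "CCO": "CCO",
    "OCC": "CCO",
    "C(O)C": "CCO",
    "CCN": "CCN",
    "NCC": "CCN",
}


def deduplicate(smiles_list):
    """Keep one entry per molecule by comparing canonical forms."""
    seen = set()
    unique = []
    for s in smiles_list:
        key = TOY_CANONICAL[s]
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique


database = ["OCC", "CCO", "NCC", "C(O)C"]
unique_entries = deduplicate(database)
print(unique_entries)  # ['CCO', 'CCN']
```

In practice the table would be replaced by the canonical SMILES output of a cheminformatics toolkit.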

The difficulty of canonicalization and thus perceived weakness of SMILES in creating unique strings led (in part) to the creation of InChI strings. InChI is an alternative that is inherently canonical. InChI strings are typically longer and involve more tokens, which seems to affect their use in deep learning. Models trained on InChI often perform worse than models trained on SMILES with the same amount of data.

If you’ve read the previous chapters on equivariances (Input Data & Equivariances and Equivariant Neural Networks), a natural question is if SMILES is permutation invariant. That is, if you change the order of atoms in the molecular graph in a way that has no effect on chemistry, is the SMILES string identical? Yes, if you use the canonical SMILES. So in a supervised setting, using canonical SMILES gives an atom ordering permutation invariant neural network because the representation will not be permuted after canonicalization. Be careful; you should not trust that SMILES you find in a dataset are canonical.

13.1.1. SELFIES

Recent work from Krenn et al. developed an alternative approach to SMILES called SELF-referencIng Embedded Strings (SELFIES) [KHN+20]. Every SELFIES string is a valid molecule. Note that the SELFIES alphabet is a specific set of tokens rather than all ASCII characters, so it’s not like every sentence encodes a molecule (would be cool though). SELFIES is an excellent choice for generative models because any SELFIES string automatically decodes to a valid molecule. SELFIES, as of 2021, is not directly canonicalized though and thus is not permutation invariant by itself. However, if you add canonical SMILES as an intermediate step, then SELFIES are canonical. It seems that models which output a molecule (generative or supervised) benefit from using SELFIES instead of SMILES because the model does not need to learn how to make valid strings – all strings are already valid SELFIES [RZS20]. This benefit is less clear in supervised learning and no difference has been observed empirically [CGR20]. Here’s a blog post giving an overview of SELFIES and its applications.

13.1.1.1. Demo

You can get a sense for SMILES and SELFIES in this demo page that uses an RNN (discussed below) to generate SMILES and SELFIES strings.

13.1.2. Stereochemistry

SMILES and SELFIES can treat stereoisomers, but there are a few complications. RDKit, the dominant Python package, cannot treat non-tetrahedral chiral centers with SMILES as of 2022. For example, even though SMILES according to its specification can correctly distinguish cisplatin and transplatin, the implementation of SMILES in RDKit cannot. Other examples of chirality that are present in the SMILES specification but not in implementations are planar and axial chirality. SELFIES relies on SMILES (most often the RDKit implementation) and thus is also susceptible to this problem. This is an issue for any organometallic compounds. In organic chemistry though, most chirality is tetrahedral and correctly treated by RDKit.

13.1.3. Other Ideas

Recent work by Kim et al. [KNM+22] has shown that we may actually be able to directly insert the graph as a sequence into a sequence neural network without needing to make a decision like using SMILES or SELFIES. They basically add a special character/embedding for noting if a piece of a graph is a node or edge.

13.1.4. What is a chemical bond?

More broadly, the idea of a chemical bond is a concept created by chemists [Bal11]. You cannot measure the existence of a chemical bond in the lab and it is not some quantum mechanical operator with an observable. There are certain molecules which cannot be represented by classic single, double, triple, or aromatic bonded representations, like ferrocene or diborane. This bleeds over to text encoding of a molecule where the bonding topology doesn’t map neatly to bond order. The specific issue this can cause is that multiple unique molecules may appear to have the same encoding (non-injective). In situations like this, it is probably better to just work with the exact 3D coordinates, where bond order or type is less important than the distance between atoms.

13.2. Running This Notebook

This page can be launched as an interactive Google Colab. See details below on installing packages.

Tip

To install packages, execute this code in a new cell.

!pip install dmol-book

If you find install problems, you can get the latest working versions of packages used in this book here

13.3. Recurrent Neural Networks

Recurrent neural networks (RNNs) have been by far the most popular approach to working with molecular strings. RNNs have a critical property that they can have different length input sequences, making them appropriate for SMILES or SELFIES, which both have variable length. RNNs have recurrent layers that consume an input sequence element-by-element. Consider an input sequence \(\mathbf{X}\) which is composed of a series of vectors (recall that characters or words can be represented with one-hot or embedding vectors) \(\mathbf{X} = \left[\vec{x}_0, \vec{x}_1,\ldots,\vec{x}_L\right]\). The RNN layer function is binary and takes as input the \(i\)th element of the input sequence and the output from the \(i - 1\) layer function. You can write it as:

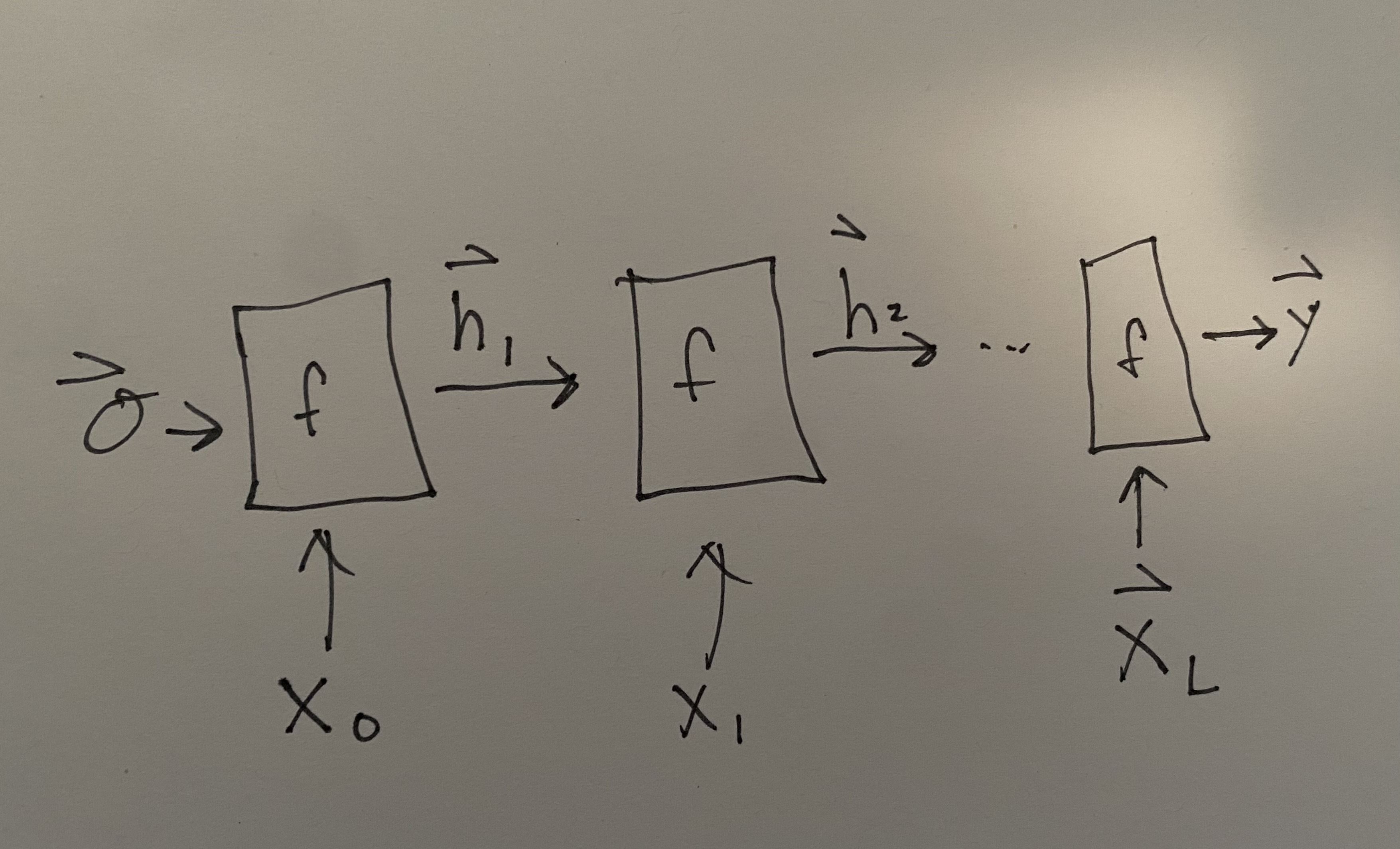
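In text form, the recursion described above can be written as follows. This is a sketch that writes \(\vec{h}_i\) for the output of the layer function at step \(i\) and uses the argument order \(f(\vec{x}_i, \vec{h}_{i-1})\). The index \(-1\) for the initial value and the choice of \(\vec{0}\) as that initial value are notational assumptions made here.

```latex
\begin{aligned}
\vec{h}_{-1} &= \vec{0} \\
\vec{h}_i &= f\left(\vec{x}_i, \vec{h}_{i-1}\right), \quad i = 0, 1, \ldots, L \\
\vec{h}_L &= f\left(\vec{x}_L, f\left(\vec{x}_{L-1}, \ldots f\left(\vec{x}_0, \vec{0}\right) \ldots \right)\right)
\end{aligned}
```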

Commonly we would like to look at the intermediate outputs from the layer function, for example \(f(\vec{x}_4, \vec{h}_3) = \vec{h}_4\). These \(\vec{h}\)s are called the hidden state because of the connection between RNNs and Markov State Models. We can unroll our picture of an RNN to be:

Fig. 13.1 Unrolled picture of RNN.

where the initial hidden state is assumed to be \(\vec{0}\), but could be trained. The output at the end is shown as \(\vec{y}\). Notice there are no subscripts on \(f\) because we use the same function and weights at each step. This re-use of weights makes the number of parameters independent of input length, which is also necessary to make the RNN accommodate arbitrary length input sequences. It should be noted that the length of \(\vec{y}\) may be a function of the input length, so that the \(\vec{h}_i\) may be increasing in length at each step to enable an output \(\vec{y}\). Some diagrams of RNNs will show that by indicating a growing output sequence as an additional output from \(f(\vec{x}_i, \vec{h}_{i-1})\).
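The weight re-use can be made concrete with a small NumPy sketch. The layer function here is a plain tanh cell, which is an assumption chosen for brevity and is not an LSTM or GRU. The names `W`, `U`, `b`, and `run_rnn` and the random inputs are likewise made up for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
input_dim, units = 4, 3

# One set of weights, shared by every step of the sequence
W = rng.normal(size=(units, input_dim))
U = rng.normal(size=(units, units))
b = np.zeros(units)


def f(x, h):
    """A plain tanh recurrent cell: new hidden state from input and old state."""
    return np.tanh(W @ x + U @ h + b)


def run_rnn(X):
    """Apply f element-by-element; X has shape (length, input_dim)."""
    h = np.zeros(units)  # initial hidden state is the zero vector
    hidden_states = []
    for x in X:
        h = f(x, h)
        hidden_states.append(h)
    return np.array(hidden_states)


# The same weights handle sequences of different lengths
short_seq = rng.normal(size=(5, input_dim))
long_seq = rng.normal(size=(12, input_dim))
h_short = run_rnn(short_seq)
h_long = run_rnn(long_seq)
print(h_short.shape, h_long.shape)  # (5, 3) (12, 3)
```

The parameter count (24 here) depends only on `input_dim` and `units`, not on the sequence length.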

Interestingly, the form of \(f(\vec{x}, \vec{h})\) is quite flexible based on the discussion above. There have been hundreds of ideas for the function \(f\) and it is problem dependent. The two most common are long short-term memory (LSTM) units and gated recurrent units (GRUs). You can spend quite a bit of time trying to reason about these functions, understanding how gradients propagate nicely through them, and there is an analogy about how they are inspired by human memory. Ultimately, they are used because they perform well and are widely implemented, so we do not need to spend much time on these details. The main thing to know is that GRUs are simpler and faster, but LSTMs seem to be better at more difficult sequences. Note that \(\vec{h}\) is typically 1-3 different quantities in modern implementations. Another detail is the word units. Units are like the hidden state dimension, but because the hidden state could be multiple quantities (e.g., LSTM) we do not call it dimension.

The RNN layer allows us to input an arbitrary length sequence and outputs a label which could depend on the length of the input sequence. You can imagine that this could be used for regression or classification. For regression, \(\hat{y}\) would be a scalar. For classification, you could pass the output from an RNN layer into an MLP to get a class.

13.3.1. Generative RNNs

An interesting use case for an RNN is in unsupervised generative models, where we try to predict new examples. This means that we’re trying to learn \(P(\mathbf{X})\) [SKTW18]. With a generative RNN, we predict the sequence one symbol at a time by conditioning on a growing sequence. This is called autoregressive generation.
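A minimal sketch of autoregressive generation is shown below. The table `NEXT_PROBS` is a hypothetical stand-in for a trained model and looks only at the previous token, whereas an RNN would condition on the whole growing sequence through its hidden state. The `^` start and `$` end tokens are also assumptions of this example.

```python
import random

# Hypothetical next-token table P(next | previous); a trained RNN would
# condition on the whole prefix through its hidden state instead.
NEXT_PROBS = {
    "^": {"C": 1.0},
    "C": {"C": 0.5, "O": 0.2, "N": 0.1, "$": 0.2},
    "O": {"C": 0.3, "$": 0.7},
    "N": {"C": 0.3, "$": 0.7},
}


def sample_sequence(rng, max_len=20):
    """Generate one string token by token, feeding each sample back in."""
    tokens = ["^"]  # artificial start token
    while len(tokens) <= max_len:
        dist = NEXT_PROBS[tokens[-1]]
        nxt = rng.choices(list(dist), weights=list(dist.values()))[0]
        if nxt == "$":  # end token stops generation
            break
        tokens.append(nxt)
    return "".join(tokens[1:])


rng = random.Random(0)
samples = [sample_sequence(rng) for _ in range(5)]
print(samples)
```

Each sampled token is appended and becomes part of the input for the next step, which is what makes the procedure autoregressive.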

The RNN is trained to take as input a sequence and output the probability for the next character. Our network is trained to be this conditional probability: \(P(\vec{x}_i | \vec{x}_{i - 1}, \vec{x}_{i - 2}, \ldots, \vec{x}_0)\). What about the \(P(\vec{x}_0)\) term? Typically we just pick what the first character should be. Or, we could create an artificial “start” character that marks the beginning of a sequence (typically 0) and always choose that.
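These conditional probabilities connect to \(P(\mathbf{X})\) through the chain rule of probability. Written out for a sequence of length \(L + 1\), with the first-character term kept separate as discussed above:

```latex
P(\mathbf{X}) = P(\vec{x}_0) \prod_{i=1}^{L} P\left(\vec{x}_i \,|\, \vec{x}_{i-1}, \vec{x}_{i-2}, \ldots, \vec{x}_0\right)
```

Sampling each factor in order, from left to right, is what produces a new sequence.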

We can train the RNN to agree with \(P(\vec{x}_i | \vec{x}_{i - 1}, \vec{x}_{i - 2}, \ldots, \vec{x}_0)\) by taking an arbitrary sequence \(\vec{x}\), choosing a split point \(\vec{x}_i\), and training on the preceding sequence elements. This is just multi-class classification. The number of classes is the number of available characters and our model should output a probability vector across the classes. Recall that the loss for this is cross-entropy.
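The split-point idea can be sketched in a few lines. The helper names `next_char_examples` and `cross_entropy`, the `^` start character, and the probability vector `predicted` are all made up for this example; in a real model the probabilities would come from the network.

```python
import math


def next_char_examples(smiles, start="^"):
    """Split a string at every position into (prefix, next character) pairs."""
    tokens = start + smiles
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def cross_entropy(probs, target):
    """Loss for one example: negative log-probability of the true next character."""
    return -math.log(probs[target])


examples = next_char_examples("CCO")
print(examples)  # [('^', 'C'), ('^C', 'C'), ('^CC', 'O')]

# A made-up model output for the prefix '^CC' over a three-character vocabulary
predicted = {"C": 0.5, "O": 0.25, "N": 0.25}
loss = cross_entropy(predicted, "O")
print(round(loss, 4))  # 1.3863
```

Each pair is one classification example, with the prefix as input and the next character as the class label.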

When doing this process with SMILES an obvious way to judge success would be if the generated sequences are valid SMILES strings. This at first seems reasonable and was used as a benchmark for years in this topic. However, this is a low bar: we can find valid SMILES in much more efficient ways. You can download 77 million SMILES [CGR20] and you can find vendors that will give you a multi-million entry database of purchasable molecules. You can also just use SELFIES and then even an untrained RNN will generate only valid strings, since every SELFIES string decodes to a valid molecule. A more interesting metric is to assess if your generated molecules are in the same region of chemical space as the training data [SKTW18]. I believe though that generative RNNs are relatively poor compared with other generative models in 2021. They are still strong though when composed with other architectures, like VAEs [GomezBWD+18] or encoder/decoder [RZS20].

You can see a worked out example in Generative RNN in Browser.

13.4. Masking & Padding

As in our Graph Neural Networks chapter, we run into issues with variable length inputs. The easiest and most compute efficient way to treat this is to pad (and/or trim) all strings to be the same length, making it easy to batch examples. A memory efficient way is to not batch and either batch gradients as a separate step or trim your sequences into subsequences and save the RNN hidden state between them. Due to the way that NVIDIA has written RNN kernels, padding should always be done on the right (sequences all begin at index 0). The character used for padding is typically 0. Don’t forget, we will always first convert our string characters to integers corresponding to indices of our vocabulary (see Standard Layers). Thus, make sure that index 0 is reserved for padding.
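Here is a minimal pure-Python sketch of these steps: building a vocabulary whose indices start at 1, converting characters to integers, trimming, and right-padding with 0. The function names `build_vocab` and `encode_and_pad` and the three SMILES strings are assumptions for this example, not part of any framework.

```python
def build_vocab(strings):
    """Map each character to an integer, starting at 1 so 0 can mean padding."""
    chars = sorted(set("".join(strings)))
    return {ch: i + 1 for i, ch in enumerate(chars)}


def encode_and_pad(strings, vocab, length):
    """Convert to integers, trim to `length`, and right-pad with zeros."""
    batch = []
    for s in strings:
        ids = [vocab[ch] for ch in s][:length]
        batch.append(ids + [0] * (length - len(ids)))
    return batch


smiles = ["CCO", "C=O", "CC(C)N"]
vocab = build_vocab(smiles)
padded = encode_and_pad(smiles, vocab, length=5)
print(vocab)
print(padded)
```

Every row now has the same length, so the batch can be stored as a single rectangular array. The last string was trimmed from six characters to five.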

Masking is used for two things. The first is to ensure that the padded values are not accidentally considered in training. This is framework dependent and you can read about Keras here, which is what we’ll use. The second use for masking is to do element-by-element training like the generative RNN. We train each time with a shorter mask, enabling the model to see more of the sequence. This prevents you from needing to slice up the training examples into many shorter sequences. This idea of a right-mask that prevents the model from using characters farther in the sequence is sometimes called causal masking because we’re preventing characters from the “future” affecting the model.
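Both kinds of mask can be written as boolean arrays. The sketch below uses NumPy rather than a deep learning framework, and the integer sequences and `values` array are made-up inputs. It shows a padding mask, a causal mask, and a mean over real tokens only.

```python
import numpy as np

# Two right-padded integer sequences; 0 is the padding index
padded = np.array([[4, 4, 6, 0, 0],
                   [4, 3, 6, 5, 2]])

# Padding mask: True where there is a real token
padding_mask = padded != 0

# Causal mask for length-5 sequences: position i may use positions 0..i only
length = padded.shape[1]
causal_mask = np.tril(np.ones((length, length), dtype=bool))

# Masked mean over real tokens only (padded positions contribute nothing)
values = np.array([[1.0, 2.0, 3.0, 100.0, 100.0],
                   [1.0, 2.0, 3.0, 4.0, 5.0]])
masked_mean = (values * padding_mask).sum(axis=1) / padding_mask.sum(axis=1)
print(masked_mean)  # [2. 3.]
```

Without the padding mask, the two values of 100 in the first row would dominate its mean.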

13.5. RNN Solubility Example

Let’s revisit our solubility example from before. We’ll use a GRU to encode the SMILES string into a vector and then apply a dense layer to get a scalar value for solubility. We’ll again use the AqSolDB [SKE19] dataset from Regression & Model Assessment. Recall it has about 10,000 unique compounds with measured solubility in water (label) and their SMILES strings. Many of the steps below are explained in the Standard Layers chapter that introduces Keras and the principles of building a deep model.

I’ve hidden the cell below which sets-up our imports and shown a few rows of the dataset.

import pandas as pd
import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
import dmol

soldata = pd.read_csv(
    "https://github.com/whitead/dmol-book/raw/main/data/curated-solubility-dataset.csv"
)
features_start_at = list(soldata.columns).index("MolWt")
np.random.seed(0)
soldata.head()

|  | ID | Name | InChI | InChIKey | SMILES | Solubility | SD | Ocurrences | Group | MolWt | ... | NumRotatableBonds | NumValenceElectrons | NumAromaticRings | NumSaturatedRings | NumAliphaticRings | RingCount | TPSA | LabuteASA | BalabanJ | BertzCT |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | A-3 | N,N,N-trimethyloctadecan-1-aminium bromide | InChI=1S/C21H46N.BrH/c1-5-6-7-8-9-10-11-12-13-... | SZEMGTQCPRNXEG-UHFFFAOYSA-M | [Br-].CCCCCCCCCCCCCCCCCC[N+](C)(C)C | -3.616127 | 0.0 | 1 | G1 | 392.510 | ... | 17.0 | 142.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 | 158.520601 | 0.000000e+00 | 210.377334 |
| 1 | A-4 | Benzo[cd]indol-2(1H)-one | InChI=1S/C11H7NO/c13-11-8-5-1-3-7-4-2-6-9(12-1... | GPYLCFQEKPUWLD-UHFFFAOYSA-N | O=C1Nc2cccc3cccc1c23 | -3.254767 | 0.0 | 1 | G1 | 169.183 | ... | 0.0 | 62.0 | 2.0 | 0.0 | 1.0 | 3.0 | 29.10 | 75.183563 | 2.582996e+00 | 511.229248 |
| 2 | A-5 | 4-chlorobenzaldehyde | InChI=1S/C7H5ClO/c8-7-3-1-6(5-9)2-4-7/h1-5H | AVPYQKSLYISFPO-UHFFFAOYSA-N | Clc1ccc(C=O)cc1 | -2.177078 | 0.0 | 1 | G1 | 140.569 | ... | 1.0 | 46.0 | 1.0 | 0.0 | 0.0 | 1.0 | 17.07 | 58.261134 | 3.009782e+00 | 202.661065 |
| 3 | A-8 | zinc bis[2-hydroxy-3,5-bis(1-phenylethyl)benzo... | InChI=1S/2C23H22O3.Zn/c2*1-15(17-9-5-3-6-10-17... | XTUPUYCJWKHGSW-UHFFFAOYSA-L | [Zn++].CC(c1ccccc1)c2cc(C(C)c3ccccc3)c(O)c(c2)... | -3.924409 | 0.0 | 1 | G1 | 756.226 | ... | 10.0 | 264.0 | 6.0 | 0.0 | 0.0 | 6.0 | 120.72 | 323.755434 | 2.322963e-07 | 1964.648666 |
| 4 | A-9 | 4-({4-[bis(oxiran-2-ylmethyl)amino]phenyl}meth... | InChI=1S/C25H30N2O4/c1-5-20(26(10-22-14-28-22)... | FAUAZXVRLVIARB-UHFFFAOYSA-N | C1OC1CN(CC2CO2)c3ccc(Cc4ccc(cc4)N(CC5CO5)CC6CO... | -4.662065 | 0.0 | 1 | G1 | 422.525 | ... | 12.0 | 164.0 | 2.0 | 4.0 | 4.0 | 6.0 | 56.60 | 183.183268 | 1.084427e+00 | 769.899934 |

5 rows × 26 columns

We’ll extract our labels and convert SMILES into padded characters. We make use of a tokenizer, which is essentially a look-up table for how to go from the characters in a SMILES string to integers. To make our model run faster, I will filter out very long SMILES strings.

# filter out long smiles
smask = [len(s) <= 96 for s in soldata.SMILES]
print(f"Removed {soldata.shape[0] - sum(smask)} long SMILES strings")
filtered_soldata = soldata[smask]

# make tokenizer with 128 size vocab and
# have it examine all text in dataset
# lower=False keeps aromatic (c) and aliphatic (C) atoms as distinct tokens
vocab_size = 128
tokenizer = tf.keras.preprocessing.text.Tokenizer(
    vocab_size, filters="", char_level=True, lower=False
)
tokenizer.fit_on_texts(filtered_soldata.SMILES)

Removed 285 long SMILES strings

# now get padded sequences
seqs = tokenizer.texts_to_sequences(filtered_soldata.SMILES)
padded_seqs = tf.keras.preprocessing.sequence.pad_sequences(seqs, padding="post")

# Now build dataset
data = tf.data.Dataset.from_tensor_slices((padded_seqs, filtered_soldata.Solubility))

# now split into val, test, train and batch
# use the filtered row count so the 10% splits match the data actually used
N = filtered_soldata.shape[0]
split = int(0.1 * N)
test_data = data.take(split).batch(16)
nontest = data.skip(split)
val_data, train_data = nontest.take(split).batch(16), nontest.skip(split).shuffle(
    1000
).batch(16)

We’re now ready to build our model. We will just use an embedding then RNN and some dense layers to get to a final predicted solubility.

model = tf.keras.Sequential()

# make embedding and indicate that 0 should be treated as padding mask
model.add(
    tf.keras.layers.Embedding(input_dim=vocab_size, output_dim=16, mask_zero=True)
)

# RNN layer
model.add(tf.keras.layers.GRU(32))
# a dense hidden layer
model.add(tf.keras.layers.Dense(32, activation="relu"))
# regression, so no activation
model.add(tf.keras.layers.Dense(1))

model.summary()

Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 embedding (Embedding)       (None, None, 16)          2048
 gru (GRU)                   (None, 32)                4800
 dense (Dense)               (None, 32)                1056
 dense_1 (Dense)             (None, 1)                 33
=================================================================
Total params: 7,937
Trainable params: 7,937
Non-trainable params: 0
_________________________________________________________________

Now we’ll compile our model and train it. This is a regression problem, so we use mean squared error for our loss.

model.compile(tf.optimizers.Adam(1e-2), loss="mean_squared_error")

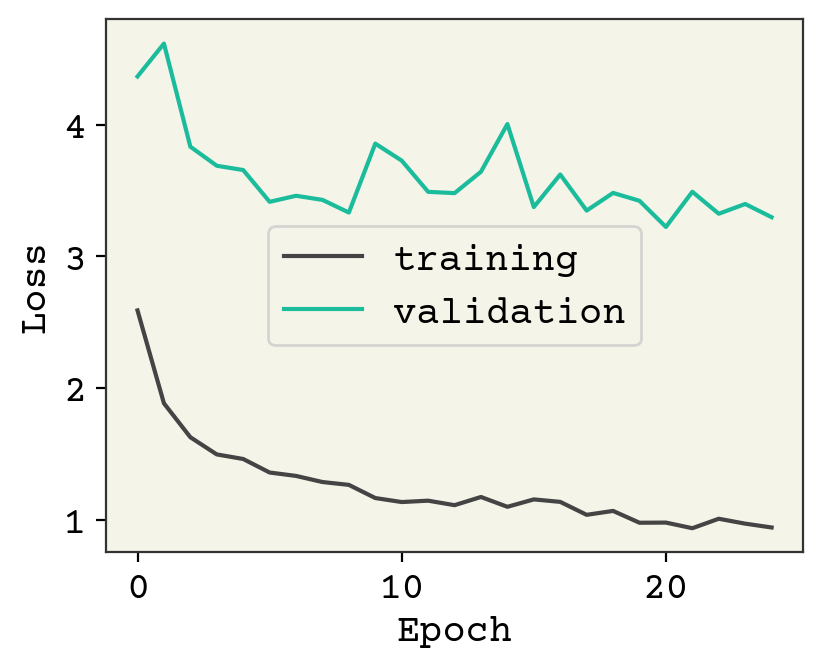

result = model.fit(train_data, validation_data=val_data, epochs=25, verbose=0)

plt.plot(result.history["loss"], label="training")
plt.plot(result.history["val_loss"], label="validation")
plt.legend()
plt.xlabel("Epoch")
plt.ylabel("Loss")
plt.show()

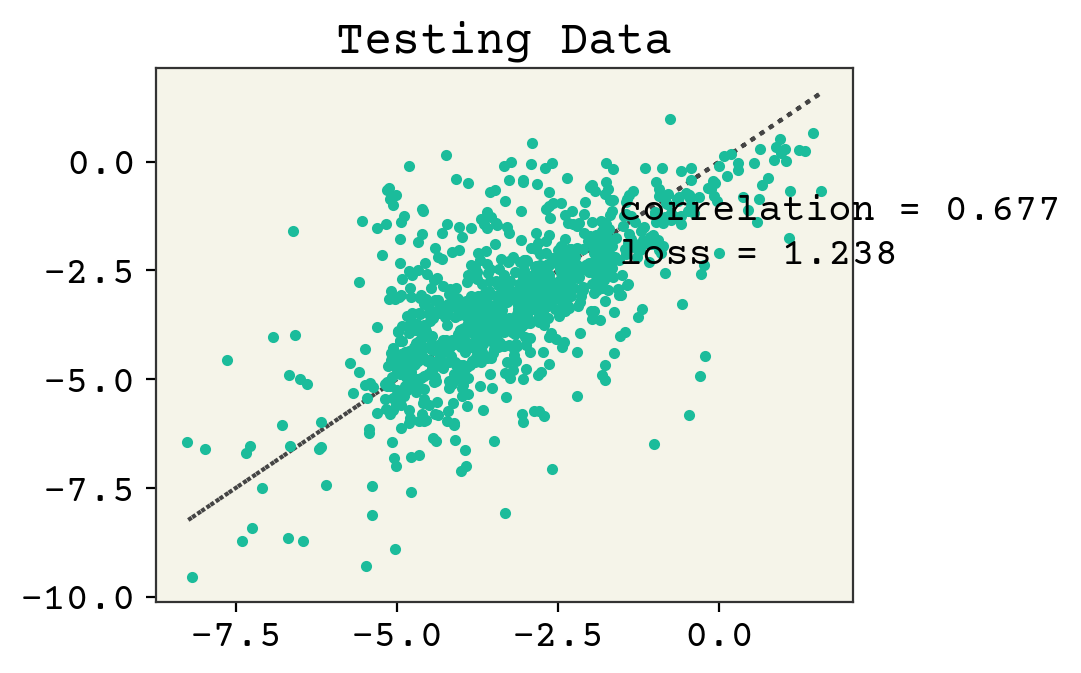

As usual, we could keep training and I encourage you to explore adding regularization or modifying the architecture. Let’s now see how the test data looks.

# evaluate on test data
yhat = []
test_y = []
for x, y in test_data:
    yhat.extend(model(x).numpy().flatten())
    test_y.extend(y.numpy().flatten())
yhat = np.array(yhat)
test_y = np.array(test_y)

# plot test data
plt.plot(test_y, test_y, ":")
plt.plot(test_y, yhat, ".")
# place the annotations relative to the full test set, not the last batch
x_text = min(test_y) + 1
y_top = max(test_y)
plt.text(x_text, y_top - 2, f"correlation = {np.corrcoef(test_y, yhat)[0,1]:.3f}")
# the square root of the mean squared error is reported here
plt.text(x_text, y_top - 3, f"RMSE = {np.sqrt(np.mean((test_y - yhat)**2)):.3f}")
plt.title("Testing Data")
plt.show()

Linear regression from Regression & Model Assessment still wins, but this demonstrates the use of an RNN for this task.

13.6. Transformers

Transformers are now well established as the state of the art for language modeling tasks. The transformer architecture is actually just multi-headed attention blocks repeated in multiple layers. The paper describing the architecture was quite a breakthrough. At the time, the best models used convolutions, recurrence, attention, and encoder/decoder. The paper title was “attention is all you need” and that is basically the conclusion [VSP+17]. They found that multi-head attention (including self-attention) was what mattered and this led to transformers. Transformers are simple and scalable because each layer is nearly the same operation. This has led to simple “scaling-up the language model” resulting in things like GPT-3, which has billions of parameters and cost millions of dollars to train. GPT-3 is also surprisingly good and versatile. The single model is able to answer questions, describe computer code, translate languages, and infer recipe instructions for cookies. I highly recommend reading the paper; it’s quite interesting [BMR+20].

There are two principles from the transformer that interest us. One is of course that it is a simple and effective replacement for RNNs. The second is that the transformer considers the whole sequence simultaneously. This has a few consequences. The first is that it is again input size dependent. However, we can pad and mask to get around that. The second consequence is that the self-supervised/unsupervised training can be more interesting than just predicting the next character in the string. Instead, we can randomly delete characters and ask the transformer to infer the missing character. This is how transformers are typically “pre-trained” – by feeding a bunch of masked sequences to teach the transformer the language. Then, if desired, the transformer can be refined with labels on your specific task. Transformers and their pre-training procedure have led to pre-trained chemistry specific models that can be downloaded and used immediately on chemistry data, like ChemBERTa [CGR20]. These pre-trained models have been trained on 77 million molecules and so should already have some “intuition” about molecular structures and they indeed do well on supervised learning tasks.
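The “delete characters and infer them” idea can be sketched as a data preparation step. The function name `mask_tokens`, the masking probability of 0.3, and the `_` placeholder (assumed not to appear in the SMILES strings) are all choices made for this example. Real pre-training pipelines typically use a dedicated mask token in the vocabulary.

```python
import random


def mask_tokens(smiles, rng, mask_prob=0.3, mask_token="_"):
    """Hide random characters; return the corrupted input and the hidden targets."""
    corrupted = []
    targets = {}  # position -> original character the model should recover
    for i, ch in enumerate(smiles):
        if rng.random() < mask_prob:
            corrupted.append(mask_token)
            targets[i] = ch
        else:
            corrupted.append(ch)
    return "".join(corrupted), targets


rng = random.Random(0)
original = "CC(=O)Nc1ccccc1"
corrupted, targets = mask_tokens(original, rng)
print(corrupted, targets)
```

The corrupted string is the model input and `targets` holds the answers at the masked positions, so once again no external labels are needed.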

13.6.1. Architecture

The transformer is fundamentally made up of layers of multi-head attention blocks as discussed in Attention Layers. You can get a detailed overview of the transformer architecture here. The overall architecture is an encoder/decoder like seen in Variational Autoencoder. Like the variational autoencoder, the decoder portion can be discarded and only the encoder is used for supervised tasks. Thus, you might pre-train the encoder/decoder with self-supervised training (strings with withheld characters) on a large dataset without labels and then use only the encoder for a regression task with a smaller dataset.

What exactly is going in and out of the encoder/decoder? The transformer is an example of a sequence to sequence (seq2seq) model and the most obvious interpretation is translating between two languages like English to French. The encoder takes in English and the decoder produces French. Or maybe SMILES to IUPAC name. However, that requires “labels” (the paired sequence). To do self-supervised pre-training, we need the input to the encoder to be a sequence missing some values and the decoder output to be the same sequence with probabilities for the value at each position filled in. This is called masked self-supervised training. If you pre-train in this way, you can do two tasks with your pre-trained encoder/decoder. You can use the encoder alone as a way to embed a string into real numbers and then use those numbers for a downstream task like predicting a molecule’s enthalpy of formation from its SMILES string. The other way to use a model trained this way is for autoregressive generation. The input might be a few characters or a prompt [RM21] specifically crafted like a question. This is similar to the generative RNN, although it allows more flexibility.

There are many details to transformers and “hand-tuned” hyperparameters. Examples in modern transformers are layer normalizations (similar to batch normalization), embeddings, dropout, weight decay, learning rate decay, and position information encoding [LOG+19]. Position information is quite an interesting topic – you need to include the location of a token (character) in its embedding. Was it the first character or last character? This is key because when you compute the attention between tokens, the relative location is probably important. Some recent promising work, called rotary positional encodings, proposed a kind of phase/amplitude split, where the position is the phase and the embedding is the amplitude [SLP+21].
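As one concrete way to include position in an embedding, the sketch below implements the fixed sinusoidal encoding from the original transformer paper [VSP+17]. Note this is not the rotary encoding mentioned above. The function name `sinusoidal_positions` and the random token embeddings are assumptions for this example, and `dim` is assumed to be even.

```python
import numpy as np


def sinusoidal_positions(length, dim):
    """Fixed position vectors: sines on even columns, cosines on odd columns."""
    positions = np.arange(length)[:, None]  # shape (length, 1)
    freqs = 1.0 / 10000 ** (np.arange(0, dim, 2) / dim)  # shape (dim / 2,)
    angles = positions * freqs  # shape (length, dim / 2)
    encoding = np.zeros((length, dim))
    encoding[:, 0::2] = np.sin(angles)
    encoding[:, 1::2] = np.cos(angles)
    return encoding


# Add position information to (random stand-in) token embeddings
rng = np.random.default_rng(0)
token_embeddings = rng.normal(size=(10, 16))
inputs_with_position = token_embeddings + sinusoidal_positions(10, 16)
print(inputs_with_position.shape)  # (10, 16)
```

Because the position vector is simply added to the token embedding, the same character at two different positions enters the attention layers as two different vectors.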

If you would like to see how to implement a real transformer with most of these details, take a look at this Keras tutorial. Because transformers are so tightly coupled with pre-training, there has been a great deal of effort in pre-training models. Aside from GPT-3, a general model pre-trained on an enormous corpus of billions of sequences from multiple languages, there are many language specific pre-trained models. Hugging Face is a company and API that hosts pre-trained transformers for specific language models like Chinese language, XML, SMILES, or question and answer format. These can be quickly downloaded and utilized, enabling rapid use of state-of-the-art language models.

13.7. Using the Latent Space for Design

One of the most interesting applications of these encoder/decoder seq2seq models in chemistry is their use for doing optimal design of a molecule. We pre-train an encoder/decoder pair with masking. The encoder brings our molecule to a continuous representation (seq2vec). Then we can do regression in this vector space for whatever property we would like (e.g., solubility). Then we can optimize this regressed model, finding an input vector that is a minimum or maximum, and finally convert that input vector into a molecule using the decoder [GomezBWD+18]. The vector space output by the encoder is called the latent space like we saw in Variational Autoencoder. Of course, this works for RNN seq2seq models, transformers, or convolutions.
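The regress-then-optimize loop can be sketched with NumPy under heavy simplifications. The latent vectors `Z` are random stand-ins for encoder outputs, the property `y` is made up to be linear in the latent space, and the “decoder” is replaced by a nearest-neighbor lookup among known molecules. None of these stand-ins come from a trained model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-ins: latent vectors that an encoder would produce for 50 molecules,
# and a made-up property that happens to be linear in the latent space.
names = [f"mol_{i}" for i in range(50)]
Z = rng.normal(size=(50, 2))
y = Z @ np.array([1.0, -2.0]) + 0.01 * rng.normal(size=50)

# 1. Regress the property on the latent vectors (linear least squares)
A = np.hstack([Z, np.ones((50, 1))])
coef, *_ = np.linalg.lstsq(A, y, rcond=None)
w, bias = coef[:2], coef[2]


def predict(z):
    """Predicted property for a latent vector z."""
    return z @ w + bias


# 2. Optimize in latent space: a few gradient-ascent steps from a known molecule
z = Z[0].copy()
for _ in range(10):
    z += 0.1 * w  # gradient of the linear model with respect to z is w

# 3. "Decode": pick the nearest known molecule as a stand-in for a decoder
nearest = int(np.argmin(np.linalg.norm(Z - z, axis=1)))
print(names[0], "->", names[nearest], predict(Z[0]), predict(z))
```

A linear model has no maximum, so the number of steps is capped to stay near the data. With a nonlinear regressor the same loop would look for a local optimum instead.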

13.8. Representing Materials as Text

Materials are an interesting problem for deep learning because they are not defined by a single molecule. There can be information like the symmetry group or components/phases for a composite material. This creates a challenge for modeling, especially for real materials that have complexities like annealing temperature, additives, and age. From a philosophical point of view, a material is defined by how it was constructed. Practically that means a material is defined by the text describing its synthesis [BDC+18]. This is an idea taken to its extreme in Tshitoyan et al. [TDW+19] who found success in representing thermoelectrics via the text describing their synthesis [SC16]. This work is amazing to me because they had to manually collect papers (publishers do not allow ML/bulk download on articles) and annotate the synthesis methods. Their seq2vec model is relatively old (2 years!) and yet there has not been much progress in this area. I think this is a promising direction but challenging due to the data access limitations. For example, recent progress by Friedrich et al. [FAT+20] built a pre-trained transformer for solid oxide fuel cell materials but their corpus was limited to open access articles (45) over a 7 year period. This is one critical line of research that is limited due to copyright issues. Text can be copyrighted, not data, but maybe someday a court can be convinced that they are interchangeable.

13.9. Applications

As discussed above, molecular design has been one of the most popular areas for sequence models in chemistry [SKTW18, GomezBWD+18, MFGS18]. Transformers have been found to be excellent at predicting chemical reactions. Schwaller et al. [SPZ+20] have shown how to do retrosynthetic pathway analysis with transformers. The transformers take as input just the reactants and reagents and can predict the products. The models can be calibrated to include uncertainty estimates [SLG+19] and predict synthetic yield [SVLR20]. Beyond taking molecules as input, Vaucher et al. trained a seq2seq transformer that can translate the unstructured methods section of a scientific paper into a set of structured synthetic steps [VZG+20]. Finally, Schwaller et al. [SPV+21] trained a transformer to classify reactions into organic reaction classes leading to a fascinating map of chemical reactions.

13.10. Summary

Text is a natural representation of both molecules and materials

SMILES and SELFIES are ways to convert molecules into strings

Recurrent neural networks (RNNs) are an input-length independent method of converting strings into vectors for regression or classification

RNNs can be trained in a seq2seq (encoder/decoder) setting by having them predict the next character in a sequence. This yields a model that can autoregressively generate new sequences/molecules

Withholding or masking parts of sequences during training is called self-supervised training. It is a pre-training step that lets seq2seq models learn the properties of a language like English or SMILES

Transformers are currently the best seq2seq models

The latent space of seq2seq models can be used for molecular design

Materials can be represented as text, which is a complete representation for many materials
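The two training setups in this summary, next-character prediction and masking, differ only in how inputs and labels are built from the same sequence. The sketch below shows both on a tokenized SMILES string. The special tokens `<s>`, `</s>`, and `<mask>`, the masking rate, and the function names are assumptions chosen for illustration.

```python
import random


def next_token_pairs(tokens, start="<s>", end="</s>"):
    """Build inputs and targets for next-token prediction.

    The target sequence is the input sequence shifted left by one position.
    """
    padded = [start] + list(tokens) + [end]
    return padded[:-1], padded[1:]


def mask_tokens(tokens, rate=0.15, mask="<mask>", seed=0):
    """Hide a random subset of tokens for self-supervised training.

    Returns the masked sequence and a dict mapping each masked position
    to the original token the model must recover.
    """
    rng = random.Random(seed)
    masked, labels = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < rate:
            masked.append(mask)
            labels[i] = tok
        else:
            masked.append(tok)
    return masked, labels


smiles = list("CC(=O)O")  # acetic acid, one character per token
x, y = next_token_pairs(smiles)
masked, labels = mask_tokens(smiles, rate=0.3)
print(x)
print(y)
print(masked, labels)
```

Neither setup needs property labels: the sequence itself supplies the training signal, which is why both can be run on large unlabeled collections of SMILES strings.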

13.11. Cited References

- Wei88

David Weininger. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. Journal of chemical information and computer sciences, 28(1):31–36, 1988.

- SKE19

Murat Cihan Sorkun, Abhishek Khetan, and Süleyman Er. AqSolDB, a curated reference set of aqueous solubility and 2D descriptors for a diverse set of compounds. Sci. Data, 6(1):143, 2019. doi:10.1038/s41597-019-0151-1.

- YCW20

Ziyue Yang, Maghesree Chakraborty, and Andrew D White. Predicting chemical shifts with graph neural networks. bioRxiv, 2020.

- KGrossGunnemann20

Johannes Klicpera, Janek Groß, and Stephan Günnemann. Directional message passing for molecular graphs. In International Conference on Learning Representations. 2020.

- VSP+17

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. In Advances in neural information processing systems, 5998–6008. 2017.

- KHN+20

Mario Krenn, Florian Häse, AkshatKumar Nigam, Pascal Friederich, and Alan Aspuru-Guzik. Self-referencing embedded strings (SELFIES): a 100% robust molecular string representation. Machine Learning: Science and Technology, 1(4):045024, 2020. doi:10.1088/2632-2153/aba947.

- BFSV19

Nathan Brown, Marco Fiscato, Marwin HS Segler, and Alain C Vaucher. GuacaMol: benchmarking models for de novo molecular design. Journal of chemical information and modeling, 59(3):1096–1108, 2019.

- OBoyle12

Noel M O’Boyle. Towards a universal SMILES representation - a standard method to generate canonical SMILES based on the InChI. Journal of cheminformatics, 4(1):1–14, 2012.

- RZS20

Kohulan Rajan, Achim Zielesny, and Christoph Steinbeck. DECIMER: towards deep learning for chemical image recognition. Journal of Cheminformatics, 12(1):1–9, 2020.

- CGR20

Seyone Chithrananda, Gabe Grand, and Bharath Ramsundar. ChemBERTa: large-scale self-supervised pretraining for molecular property prediction. arXiv preprint arXiv:2010.09885, 2020.

- KNM+22

Jinwoo Kim, Tien Dat Nguyen, Seonwoo Min, Sungjun Cho, Moontae Lee, Honglak Lee, and Seunghoon Hong. Pure transformers are powerful graph learners. arXiv preprint arXiv:2207.02505, 2022.

- Bal11

Philip Ball. Beyond the bond. Nature, 469(7328):26–28, 2011.

- SKTW18

Marwin HS Segler, Thierry Kogej, Christian Tyrchan, and Mark P Waller. Generating focused molecule libraries for drug discovery with recurrent neural networks. ACS central science, 4(1):120–131, 2018.

- GomezBWD+18

Rafael Gómez-Bombarelli, Jennifer N Wei, David Duvenaud, José Miguel Hernández-Lobato, Benjamín Sánchez-Lengeling, Dennis Sheberla, Jorge Aguilera-Iparraguirre, Timothy D Hirzel, Ryan P Adams, and Alán Aspuru-Guzik. Automatic chemical design using a data-driven continuous representation of molecules. ACS central science, 4(2):268–276, 2018.

- BMR+20

Tom B Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, and others. Language models are few-shot learners. arXiv preprint arXiv:2005.14165, 2020.

- TDG+21

Yi Tay, Mostafa Dehghani, Jai Gupta, Dara Bahri, Vamsi Aribandi, Zhen Qin, and Donald Metzler. Are pre-trained convolutions better than pre-trained transformers? arXiv preprint arXiv:2105.03322, 2021.

- RM21

Laria Reynolds and Kyle McDonell. Prompt programming for large language models: beyond the few-shot paradigm. arXiv preprint arXiv:2102.07350, 2021.

- WWCF21

Yuyang Wang, Jianren Wang, Zhonglin Cao, and Amir Barati Farimani. MolCLR: molecular contrastive learning of representations via graph neural networks. arXiv preprint arXiv:2102.10056, 2021.

- LOG+19

Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. RoBERTa: a robustly optimized BERT pretraining approach. arXiv preprint arXiv:1907.11692, 2019.

- SLP+21

Jianlin Su, Yu Lu, Shengfeng Pan, Bo Wen, and Yunfeng Liu. RoFormer: enhanced transformer with rotary position embedding. arXiv preprint arXiv:2104.09864, 2021.

- BDC+18

Keith T Butler, Daniel W Davies, Hugh Cartwright, Olexandr Isayev, and Aron Walsh. Machine learning for molecular and materials science. Nature, 559(7715):547–555, 2018.

- TDW+19

Vahe Tshitoyan, John Dagdelen, Leigh Weston, Alexander Dunn, Ziqin Rong, Olga Kononova, Kristin A Persson, Gerbrand Ceder, and Anubhav Jain. Unsupervised word embeddings capture latent knowledge from materials science literature. Nature, 571(7763):95–98, 2019.

- SC16

Matthew C Swain and Jacqueline M Cole. ChemDataExtractor: a toolkit for automated extraction of chemical information from the scientific literature. Journal of chemical information and modeling, 56(10):1894–1904, 2016.

- FAT+20

Annemarie Friedrich, Heike Adel, Federico Tomazic, Johannes Hingerl, Renou Benteau, Anika Maruscyk, and Lukas Lange. The SOFC-Exp corpus and neural approaches to information extraction in the materials science domain. arXiv preprint arXiv:2006.03039, 2020.

- MFGS18

Daniel Merk, Lukas Friedrich, Francesca Grisoni, and Gisbert Schneider. De novo design of bioactive small molecules by artificial intelligence. Molecular informatics, 37(1-2):1700153, 2018.

- SPZ+20

Philippe Schwaller, Riccardo Petraglia, Valerio Zullo, Vishnu H Nair, Rico Andreas Haeuselmann, Riccardo Pisoni, Costas Bekas, Anna Iuliano, and Teodoro Laino. Predicting retrosynthetic pathways using transformer-based models and a hyper-graph exploration strategy. Chemical Science, 11(12):3316–3325, 2020.

- SLG+19

Philippe Schwaller, Teodoro Laino, Théophile Gaudin, Peter Bolgar, Christopher A Hunter, Costas Bekas, and Alpha A Lee. Molecular transformer: a model for uncertainty-calibrated chemical reaction prediction. ACS central science, 5(9):1572–1583, 2019.

- SVLR20

Philippe Schwaller, Alain C Vaucher, Teodoro Laino, and Jean-Louis Reymond. Prediction of chemical reaction yields using deep learning. ChemRxiv Preprint, 2020. URL: https://doi.org/10.26434/chemrxiv.12758474.v2.

- VZG+20

Alain C Vaucher, Federico Zipoli, Joppe Geluykens, Vishnu H Nair, Philippe Schwaller, and Teodoro Laino. Automated extraction of chemical synthesis actions from experimental procedures. Nature communications, 11(1):1–11, 2020.

- SPV+21

Philippe Schwaller, Daniel Probst, Alain C Vaucher, Vishnu H Nair, David Kreutter, Teodoro Laino, and Jean-Louis Reymond. Mapping the space of chemical reactions using attention-based neural networks. Nature Machine Intelligence, pages 1–9, 2021.